Pandas Apply Parallel

Pandas is a powerful Python library for data manipulation and analysis, providing data structures and functions that make it easy to work with numerical tables and time series. A common operation in data processing is applying a function to each value or row of a dataset. Pandas provides efficient implementations of many operations, but applying an arbitrary Python function can be slow on large datasets. Parallel processing can speed up such operations. This article explores how to use parallel processing to speed up the apply function in Pandas.

Introduction to Pandas Apply

The apply function in Pandas lets you apply a function along an axis of a DataFrame (to each column or each row) or to each value of a Series. This is useful for complex operations that have no built-in vectorized equivalent. However, apply calls your Python function once per value, row, or column, one call after another in a single thread. This can be slow on large datasets or with computationally intensive functions.
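For reference, a short sketch of both forms on a hypothetical two-column DataFrame: `Series.apply` calls the function once per value, while `DataFrame.apply(..., axis=1)` calls it once per row and passes that row in as a Series. The column names and the `line_total` helper are made up for illustration.

```python
import pandas as pd

# Hypothetical sample data
df = pd.DataFrame({'price': [10.0, 20.0, 30.0], 'qty': [1, 2, 3]})

# Series.apply: the function receives one scalar value per call
df['double_price'] = df['price'].apply(lambda p: p * 2)

# DataFrame.apply with axis=1: the function receives one row (a Series) per call
def line_total(row):
    return row['price'] * row['qty']

df['total'] = df.apply(line_total, axis=1)

print(df)
```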

Why Use Parallel Processing?

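Parallel processing divides a task into independent subtasks and runs them simultaneously on multiple CPU cores, which can substantially reduce the total processing time when each subtask does enough work. In Python, this can be done with the standard-library modules multiprocessing and concurrent.futures, or with third-party libraries such as joblib and Dask. One caveat: in standard CPython builds, the global interpreter lock (GIL) allows only one thread at a time to execute Python bytecode, so CPU-bound pure-Python functions generally need multiple processes, not threads, to run in parallel.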
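The divide-and-combine idea can be sketched with the standard-library multiprocessing module: split a Series into contiguous chunks, run an ordinary apply on each chunk in a separate worker process, and concatenate the partial results. The names `slow_square`, `apply_chunk`, `split_into_chunks`, and `parallel_apply` are hypothetical helpers for this sketch, and `slow_square` only stands in for a genuinely expensive function.

```python
import multiprocessing as mp

import pandas as pd


def slow_square(x):
    # Hypothetical stand-in for an expensive per-value function
    return x * x


def apply_chunk(chunk):
    # Runs in a worker process: an ordinary, single-threaded apply on one chunk
    return chunk.apply(slow_square)


def split_into_chunks(series, n_chunks):
    # Contiguous slices via iloc, so each chunk keeps its original index labels
    size = max(1, -(-len(series) // n_chunks))  # ceiling division
    return [series.iloc[i:i + size] for i in range(0, len(series), size)]


def parallel_apply(series, n_workers=4):
    chunks = split_into_chunks(series, n_workers)
    with mp.Pool(processes=n_workers) as pool:
        # pool.map returns one result per chunk, in input order
        results = pool.map(apply_chunk, chunks)
    # Stitch the partial results back together in the original order
    return pd.concat(results)


if __name__ == "__main__":
    s = pd.Series(range(10))
    out = parallel_apply(s, n_workers=2)
    print(out.tolist())
```

The `if __name__ == "__main__":` guard matters on platforms that start workers with the spawn method (the default on Windows and macOS), because each worker re-imports the main module.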

Setting Up Your Environment

Before diving into the examples, make sure the necessary libraries are installed. multiprocessing and concurrent.futures ship with Python; Pandas and joblib can be installed with pip:

```shell
pip install pandas joblib
```

Example 1: Basic Usage of Apply

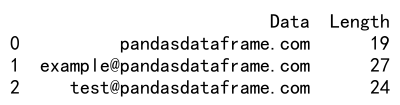

Here’s a simple example of using apply to compute the length of string entries in a DataFrame.

```python
import pandas as pd

# Create a DataFrame (illustrative sample values)
df = pd.DataFrame({
    'Data': ['pandasdataframe.com', 'alice@pandasdataframe.com', 'bob@pandasdataframe.com']
})

# Function to apply to each value
def compute_length(value):
    return len(value)

# Apply the function element-wise to the 'Data' column
df['Length'] = df['Data'].apply(compute_length)

print(df)
```

Output:

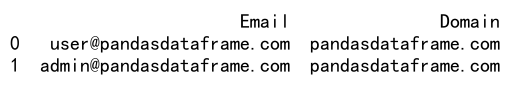
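Before parallelizing anything, it is worth checking whether pandas already has a vectorized method for the job. For string lengths, the `.str.len()` accessor gives the same result without passing a user-defined function to apply, and it is typically at least as fast. A sketch with illustrative sample values:

```python
import pandas as pd

df = pd.DataFrame({
    'Data': ['pandasdataframe.com', 'alice@pandasdataframe.com', 'bob@pandasdataframe.com']
})

# Per-value Python function call via apply
via_apply = df['Data'].apply(len)

# Built-in string accessor: the loop stays inside pandas
via_str = df['Data'].str.len()

print(via_str.tolist())
```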

Example 2: Using Apply with a Custom Function

Applying a custom function that extracts the domain name from each email address.

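```python
import pandas as pd

# Return the part of the address after the '@' sign
def extract_domain(email):
    return email.split('@')[-1]

# Illustrative sample addresses
df = pd.DataFrame({
    'Email': ['alice@pandasdataframe.com', 'bob@pandasdataframe.com']
})

df['Domain'] = df['Email'].apply(extract_domain)

print(df)
```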

Output:

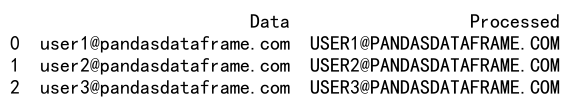
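Real email columns often contain missing values, and `extract_domain(np.nan)` fails because a float has no `split` method. One way around this is `Series.map` with `na_action='ignore'`, which passes missing values through without calling the function. A sketch with hypothetical data:

```python
import numpy as np
import pandas as pd


def extract_domain(email):
    return email.split('@')[-1]


# Hypothetical data containing a missing value
emails = pd.Series(['alice@pandasdataframe.com', np.nan, 'bob@example.org'])

# na_action='ignore' skips missing values instead of calling extract_domain on them
domains = emails.map(extract_domain, na_action='ignore')

print(domains.tolist())
```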

Example 3: Parallelizing Apply with Joblib

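Using joblib to run a function over a column in parallel. Parallel returns its results in the same order as the input, so the list can be assigned directly as a new column.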

```python
from joblib import Parallel, delayed
import pandas as pd

# Illustrative sample addresses
df = pd.DataFrame({
    'Data': ['alice@pandasdataframe.com', 'bob@pandasdataframe.com', 'carol@pandasdataframe.com']
})

def process_data(data):
    return data.upper()

# One delayed call per value, run on 2 workers; results come back in input order
results = Parallel(n_jobs=2)(delayed(process_data)(i) for i in df['Data'])

df['Processed'] = results

print(df)
```

Output:

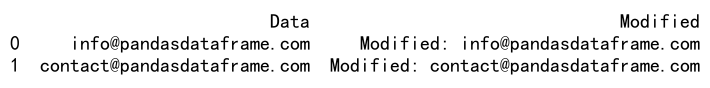
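By default joblib runs tasks in worker processes (the loky backend). For functions that mostly wait on I/O, such as network requests, passing `prefer="threads"` avoids the cost of starting processes and pickling data, and `n_jobs=-1` asks joblib to use as many workers as there are CPU cores. A sketch in which the hypothetical `fetch_status` only sleeps to imitate a slow request, using placeholder URLs:

```python
import time

from joblib import Parallel, delayed
import pandas as pd


def fetch_status(url):
    # Hypothetical stand-in for an I/O-bound call such as an HTTP request
    time.sleep(0.1)
    return f"checked {url}"


df = pd.DataFrame({'URL': ['https://example.com/a',
                           'https://example.com/b',
                           'https://example.com/c']})

# prefer="threads" runs the tasks in threads instead of worker processes
results = Parallel(n_jobs=-1, prefer="threads")(
    delayed(fetch_status)(u) for u in df['URL']
)

# Results are returned in input order, so they line up with the rows
df['Status'] = results
print(df)
```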

Example 4: Using Concurrent.futures for Parallel Apply

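Applying functions in parallel using concurrent.futures. ThreadPoolExecutor runs tasks in threads, which helps mainly when the function waits on I/O (network, disk). For CPU-bound pure-Python work, the GIL keeps threads from running in parallel, so ProcessPoolExecutor is usually the better choice.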

```python
import pandas as pd
from concurrent.futures import ThreadPoolExecutor

# Illustrative sample addresses
df = pd.DataFrame({
    'Data': ['alice@pandasdataframe.com', 'bob@pandasdataframe.com']
})

def modify_data(data):
    return f"Modified: {data}"

# executor.map returns results in input order
with ThreadPoolExecutor(max_workers=2) as executor:
    results = list(executor.map(modify_data, df['Data']))

df['Modified'] = results

print(df)
```

Output:

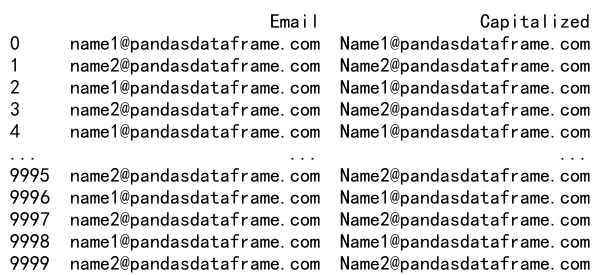
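For CPU-bound work, a minimal sketch swapping in ProcessPoolExecutor, which offers the same `map` interface. `cpu_heavy` is a hypothetical pure-Python function used only to create CPU load. The `chunksize` argument makes ProcessPoolExecutor send several values to a worker per round trip (ThreadPoolExecutor ignores it).

```python
from concurrent.futures import ProcessPoolExecutor

import pandas as pd


def cpu_heavy(n):
    # Hypothetical CPU-bound work: sum of squares below n, in pure Python
    return sum(i * i for i in range(n))


if __name__ == "__main__":
    df = pd.DataFrame({'N': [10_000, 20_000, 30_000, 40_000]})

    # Each worker process has its own interpreter and GIL,
    # so pure-Python work can run on several cores at once
    with ProcessPoolExecutor(max_workers=2) as executor:
        # chunksize=2 batches two values per task sent to a worker
        results = list(executor.map(cpu_heavy, df['N'], chunksize=2))

    df['SumSq'] = results
    print(df)
```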

Example 5: Parallel Apply on a Large Dataset

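Handling a larger dataset (10,000 rows) with parallel processing to capitalize each entry. Keep in mind that each value is pickled and sent to a worker process; for a cheap operation like str.capitalize, that overhead can outweigh the gain, and a plain apply or the vectorized Series.str.capitalize() may well be faster.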

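```python
from joblib import Parallel, delayed
import pandas as pd

# 10,000 rows built from two illustrative addresses
data = ['alice@pandasdataframe.com', 'bob@pandasdataframe.com'] * 5000
df = pd.DataFrame(data, columns=['Email'])

def capitalize_email(email):
    return email.capitalize()

# One delayed call per value; joblib batches quick tasks automatically (batch_size='auto')
df['Capitalized'] = Parallel(n_jobs=4)(delayed(capitalize_email)(i) for i in df['Email'])

print(df)
```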

Output:

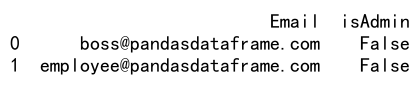
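Whether parallelism pays off here is easy to check empirically. A minimal timing sketch, assuming joblib is installed, that compares a serial apply, a joblib run like the one above, and the vectorized `Series.str.capitalize()` on the same 10,000-row column. The `timed` helper is a hypothetical name, and the timings will vary by machine and data size.

```python
import time

from joblib import Parallel, delayed
import pandas as pd


def capitalize_email(email):
    return email.capitalize()


def timed(label, func):
    # One wall-clock measurement: rough, but enough to spot dispatch overhead
    start = time.perf_counter()
    result = func()
    print(f"{label:>16}: {time.perf_counter() - start:.3f} s")
    return result


if __name__ == "__main__":
    # 10,000 rows built from two illustrative addresses
    s = pd.Series(['alice@pandasdataframe.com', 'bob@pandasdataframe.com'] * 5000)

    serial = timed("apply", lambda: s.apply(capitalize_email))
    parallel = timed("joblib n_jobs=4", lambda: Parallel(n_jobs=4)(
        delayed(capitalize_email)(e) for e in s))
    vectorized = timed("str.capitalize", lambda: s.str.capitalize())

    # All three approaches should produce the same values
    print(serial.tolist() == list(parallel) == vectorized.tolist())
```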

Example 6: Advanced Custom Function with Apply

Applying a more complex function that involves conditional logic.

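```python
import pandas as pd

# Illustrative sample addresses
df = pd.DataFrame({
    'Email': ['admin@pandasdataframe.com', 'user@pandasdataframe.com']
})

# Flag addresses that contain the substring 'admin'
def check_admin(email):
    if 'admin' in email:
        return True
    return False

df['isAdmin'] = df['Email'].apply(check_admin)

print(df)
```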

Output:

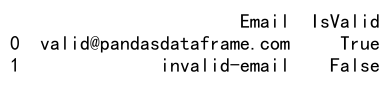
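When the conditional logic should produce more than one value per row, one option is `DataFrame.apply` with `axis=1` and `result_type='expand'`, which turns each returned tuple into separate columns. A sketch with illustrative addresses and a hypothetical `describe_row` helper:

```python
import pandas as pd

# Illustrative sample addresses
df = pd.DataFrame({'Email': ['admin@pandasdataframe.com', 'user@example.org']})


def describe_row(row):
    # Return two values per row: an admin flag and the domain
    user, _, domain = row['Email'].partition('@')
    return 'admin' in user, domain


# result_type='expand' turns each returned tuple into separate columns
expanded = df.apply(describe_row, axis=1, result_type='expand')
df['isAdmin'] = expanded.iloc[:, 0]
df['Domain'] = expanded.iloc[:, 1]

print(df)
```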

Example 7: Error Handling in Apply Functions

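It’s important to handle errors in functions applied to data, especially in a parallel setting: an uncaught exception for a single row aborts the whole apply call, and in a parallel run it typically aborts the whole job as well.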

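```python
import pandas as pd

# One well-formed (illustrative) address and one malformed value
df = pd.DataFrame({
    'Email': ['alice@pandasdataframe.com', 'invalid-email']
})

def validate_email(email):
    try:
        # Unpacking raises ValueError unless the string has exactly one '@'
        username, domain = email.split('@')
        return domain == 'pandasdataframe.com'
    except ValueError:
        return False

df['IsValid'] = df['Email'].apply(validate_email)

print(df)
```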

Output:

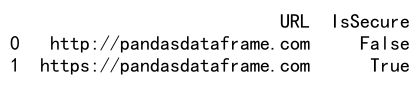
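In a parallel run there is an extra wrinkle: `Executor.map` re-raises a worker's exception when the iterator reaches that result, so one bad value ends the whole loop. A minimal sketch that wraps each call so failures come back as data instead. `safe_call` is a hypothetical helper, and this variant of `validate_email` deliberately lets the ValueError escape so the wrapper has something to catch.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial

import pandas as pd


def validate_email(email):
    # Raises ValueError unless the string contains exactly one '@'
    username, domain = email.split('@')
    return domain == 'pandasdataframe.com'


def safe_call(func, value):
    # Turn an exception into a (result, error) pair so one bad row
    # does not abort the whole parallel map
    try:
        return func(value), None
    except Exception as exc:
        return None, repr(exc)


# Illustrative sample data
df = pd.DataFrame({'Email': ['alice@pandasdataframe.com', 'invalid-email']})

with ThreadPoolExecutor(max_workers=2) as executor:
    pairs = list(executor.map(partial(safe_call, validate_email), df['Email']))

df['IsValid'] = [result for result, _ in pairs]
df['Error'] = [error for _, error in pairs]
print(df)
```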

Example 8: Using Apply with Lambda Functions

Lambda functions provide a quick way of writing small functions to be used with apply.

```python
import pandas as pd

df = pd.DataFrame({
    'URL': ['http://pandasdataframe.com', 'https://pandasdataframe.com']
})

df['IsSecure'] = df['URL'].apply(lambda x: x.startswith('https'))

print(df)
```

Output:

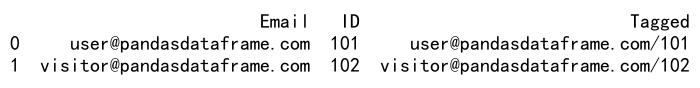
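Lambdas are convenient with apply, but they become a problem with process-based parallelism: multiprocessing and ProcessPoolExecutor send the function to workers with the standard pickle module, which pickles module-level functions by reference and cannot pickle lambdas. A small check, using the hypothetical helper names `is_secure` and `can_pickle`:

```python
import pickle


def is_secure(url):
    return url.startswith('https')


def can_pickle(obj):
    # Process pools pickle the function before sending it to a worker;
    # this checks whether that step would succeed
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, AttributeError, TypeError):
        return False


print(can_pickle(is_secure))
print(can_pickle(lambda url: url.startswith('https')))
```

joblib's default loky backend serializes functions with cloudpickle, so it generally accepts lambdas; with the standard-library process pools, a named module-level function is the safer choice.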

Example 9: Parallel Apply with Multiple Arguments

Sometimes, you might need to pass multiple arguments to the function being applied.

```python
from joblib import Parallel, delayed
import pandas as pd

# Illustrative sample data
df = pd.DataFrame({
    'Email': ['alice@pandasdataframe.com', 'bob@pandasdataframe.com'],
    'ID': [101, 102]
})

def tag_user(email, user_id):
    return f"{email}/{user_id}"

# zip pairs each email with its ID; user_id avoids shadowing the built-in id()
results = Parallel(n_jobs=2)(
    delayed(tag_user)(email, user_id) for email, user_id in zip(df['Email'], df['ID'])
)

df['Tagged'] = results

print(df)
```
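Two other ways to pass extra arguments, sketched with illustrative data: `Series.apply` forwards extra positional arguments through `args=` and keyword arguments as-is, and `Executor.map` accepts one iterable per parameter and pairs them like `zip`. The `sep` parameter and the `'guest'` tag are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

import pandas as pd


def tag_user(email, user_id, sep='/'):
    return f"{email}{sep}{user_id}"


# Illustrative sample data
df = pd.DataFrame({
    'Email': ['alice@pandasdataframe.com', 'bob@pandasdataframe.com'],
    'ID': [101, 102],
})

# Series.apply: each call becomes tag_user(email, 'guest', sep=':')
df['GuestTag'] = df['Email'].apply(tag_user, args=('guest',), sep=':')

# Executor.map with two iterables: each call becomes tag_user(email, user_id)
with ThreadPoolExecutor(max_workers=2) as executor:
    df['Tagged'] = list(executor.map(tag_user, df['Email'], df['ID']))

print(df)
```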


Pandas Apply Parallel Conclusion

Parallel processing can significantly improve the performance of data manipulation tasks in Pandas, especially with large datasets or expensive per-row functions. Libraries such as joblib, concurrent.futures, and Dask let you apply functions across DataFrame columns or rows in parallel and reduce execution time. Parallelism only pays off when the work per task outweighs the overhead of starting workers and transferring data to them, so choose the number of workers and the size of each chunk with that trade-off in mind, and check first whether a vectorized pandas method already does the job.
