Pandas Drop_Duplicates

Pandas is a powerful data manipulation library in Python, and one of its most useful features is the ability to handle duplicate data. The drop_duplicates() method is a crucial tool for removing duplicate rows from a DataFrame. This article will provide an in-depth exploration of the drop_duplicates() function, its parameters, use cases, and various examples to demonstrate its functionality.

Introduction to Duplicate Data

Before diving into the drop_duplicates() method, it’s essential to understand what duplicate data is and why it’s important to handle it. Duplicate data refers to rows in a DataFrame that have identical values across all columns or a specified subset of columns. Duplicate data can arise from various sources, such as data entry errors, merging datasets, or collecting data from multiple sources.

Handling duplicate data is crucial for several reasons:

- Data integrity: Duplicate data can skew analysis results and lead to incorrect conclusions.

- Storage efficiency: Removing duplicates can reduce the size of your dataset, saving storage space and improving performance.

- Data cleaning: Eliminating duplicates is often a necessary step in the data cleaning process.
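
Before removing anything, it can help to measure how many duplicates a dataset actually contains. The sketch below is illustrative and uses the companion method duplicated(), which this article does not otherwise cover; the sample data is made up for the demonstration.

```python
import pandas as pd

# Small illustrative dataset (made up for this sketch)
df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
})

# duplicated() returns a boolean Series: True marks a row that repeats an earlier row
dup_mask = df.duplicated()

n_duplicates = int(dup_mask.sum())  # number of rows drop_duplicates() would remove
duplicate_rows = df[dup_mask]       # the repeated rows themselves

print(f"{n_duplicates} duplicate rows out of {len(df)}")
print(duplicate_rows)
```

Counting first gives a quick sense of whether duplicates are a minor nuisance or a sign of a deeper problem in how the data was collected or merged.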

The drop_duplicates() Method

The drop_duplicates() method is a built-in function in Pandas that allows you to remove duplicate rows from a DataFrame. It provides several parameters to customize how duplicates are identified and which rows are kept or removed.

Basic Syntax

The basic syntax of the drop_duplicates() method is as follows:

DataFrame.drop_duplicates(subset=None, keep='first', inplace=False, ignore_index=False)

Let’s break down the parameters:

- subset: Specifies the column or list of columns to consider when identifying duplicates. If not specified, all columns are used.

- keep: Determines which duplicate to keep. Options are ‘first’ (default), ‘last’, or False. With False, every row that has a duplicate is dropped.

- inplace: If True, modifies the DataFrame in place and returns None. If False (default), returns a new DataFrame with duplicates removed.

- ignore_index: If True, relabels the index of the resulting DataFrame as 0, 1, …, n - 1. If False (default), the surviving rows keep their original index labels.

Now, let’s explore various examples and use cases of the drop_duplicates() method.

Example 1: Basic Usage

Let’s start with a simple example to demonstrate the basic usage of drop_duplicates().

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop duplicates

df_no_duplicates = df.drop_duplicates()

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)

# This example demonstrates basic usage of drop_duplicates() from pandasdataframe.com

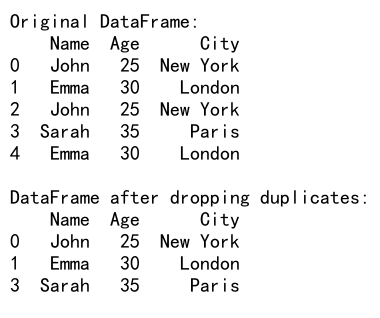

Output:

In this example, we create a DataFrame with duplicate rows and use drop_duplicates() without any parameters. By default, it considers all columns when identifying duplicates and keeps the first occurrence of each duplicate group.

Example 2: Specifying Subset of Columns

Sometimes, you may want to consider only specific columns when identifying duplicates. Here’s an example:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 31],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop duplicates based on 'Name' and 'City' columns

df_no_duplicates = df.drop_duplicates(subset=['Name', 'City'])

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates based on 'Name' and 'City':")

print(df_no_duplicates)

# This example shows how to use drop_duplicates() with a subset of columns from pandasdataframe.com

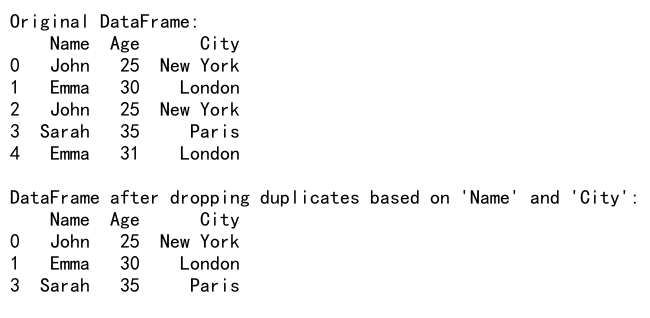

Output:

In this example, we specify a subset of columns (‘Name’ and ‘City’) to consider when identifying duplicates. This means that rows with the same ‘Name’ and ‘City’ values will be considered duplicates, even if other columns differ.
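
When a subset is used, the rows that get dropped may carry different values in the other columns, so it can be worth inspecting the affected groups first. The following is a minimal sketch using duplicated() with keep=False; the variable names are illustrative only.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 31],
    "City": ["New York", "London", "New York", "Paris", "London"],
})

key_columns = ["Name", "City"]

# keep=False flags every member of a duplicate group, not only the later repeats
in_duplicate_group = df.duplicated(subset=key_columns, keep=False)
print(df[in_duplicate_group].sort_values(key_columns))

# With the default keep='first', the earlier row of each group survives
deduplicated = df.drop_duplicates(subset=key_columns)
print(deduplicated)
```

Here the two Emma rows differ in Age (30 and 31). Dropping on ‘Name’ and ‘City’ keeps the first of them and discards the other without any warning.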

Example 3: Keeping Last Occurrence

By default, drop_duplicates() keeps the first occurrence of each duplicate group. You can change this behavior using the keep parameter:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 26, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop duplicates, keeping the last occurrence

df_no_duplicates = df.drop_duplicates(keep='last')

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates, keeping the last occurrence:")

print(df_no_duplicates)

# This example demonstrates using drop_duplicates() with keep='last' from pandasdataframe.com

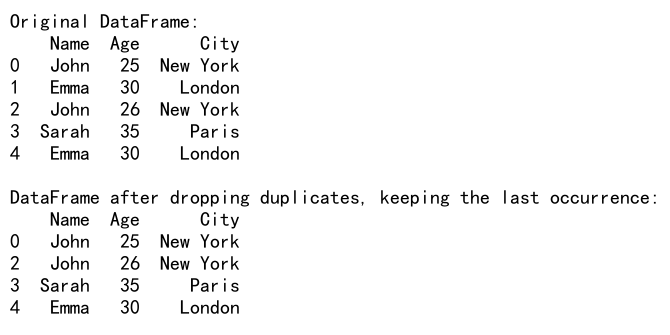

Output:

In this example, we use keep='last' to keep the last occurrence of each duplicate group. This can be useful when you want to retain the most recent or updated version of duplicate entries.

Example 4: Removing All Duplicates

If you want to remove all duplicates, including the first occurrence, you can set keep=False:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop all duplicates

df_no_duplicates = df.drop_duplicates(keep=False)

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping all duplicates:")

print(df_no_duplicates)

# This example shows how to remove all duplicates using drop_duplicates() from pandasdataframe.com

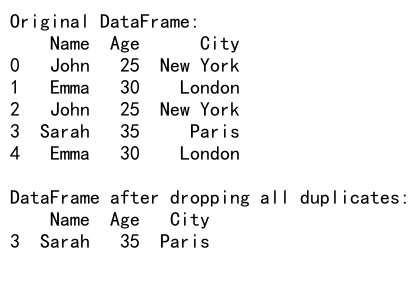

Output:

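This example demonstrates keep=False, which drops every row that has a duplicate, including the first occurrence. Only rows that appear exactly once in the original DataFrame remain (here, just Sarah’s row), which is useful when any repeated record should be treated as suspect.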

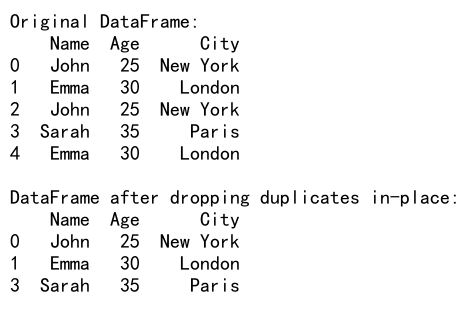
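
The three keep options can be compared side by side by recording which original index labels survive under each one. This is a small sketch on the same sample data; the dictionary name is illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
})

# Record which original index labels survive under each keep option
surviving = {
    str(keep): df.drop_duplicates(keep=keep).index.tolist()
    for keep in ("first", "last", False)
}

for option, labels in surviving.items():
    print(f"keep={option!s:<6} -> rows {labels}")
```

With fully identical rows, ‘first’ and ‘last’ keep the same values and differ only in which index labels remain. The choice starts to matter for the data itself once subset is used and the other columns differ.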

Example 5: Modifying the DataFrame In-Place

By default, drop_duplicates() returns a new DataFrame. If you want to modify the original DataFrame, you can use the inplace parameter:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

print("Original DataFrame:")

print(df)

# Drop duplicates in-place

df.drop_duplicates(inplace=True)

print("\nDataFrame after dropping duplicates in-place:")

print(df)

# This example demonstrates in-place modification using drop_duplicates() from pandasdataframe.com

Output:

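In this example, we use inplace=True to modify the original DataFrame directly. The call then returns None, so its result should not be assigned to a variable. Note that pandas generally still builds the deduplicated result internally before updating the original, so inplace=True is mostly a matter of style and should not be relied on to save memory.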

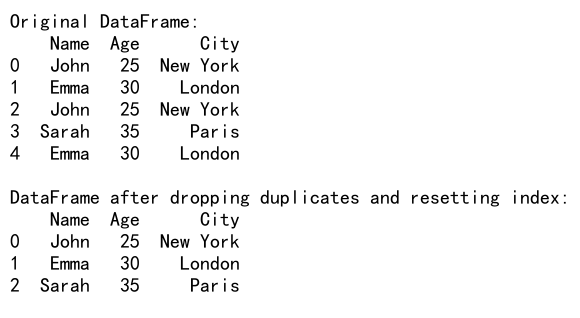
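
A common pitfall with inplace=True is assigning the return value. The sketch below shows the two equivalent patterns on the same sample data; the variable names are illustrative.

```python
import pandas as pd

data = {
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
}

# Pattern 1: in-place modification; the return value is None, so do not assign it
df_inplace = pd.DataFrame(data)
returned = df_inplace.drop_duplicates(inplace=True)
print(returned)         # None is returned when inplace=True
print(len(df_inplace))  # the original object now holds the deduplicated rows

# Pattern 2: reassignment; same final contents, and it works in method chains
df_reassigned = pd.DataFrame(data)
df_reassigned = df_reassigned.drop_duplicates()

print(df_inplace.equals(df_reassigned))
```

Writing df = df.drop_duplicates(inplace=True) would replace the DataFrame with None, which is why the reassignment pattern is often the safer habit.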

Example 6: Resetting the Index

When you drop duplicates, the resulting DataFrame may have a non-contiguous index. You can reset the index using the ignore_index parameter:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop duplicates and reset index

df_no_duplicates = df.drop_duplicates(ignore_index=True)

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates and resetting index:")

print(df_no_duplicates)

# This example shows how to reset the index when using drop_duplicates() from pandasdataframe.com

Output:

This example demonstrates how to reset the index of the resulting DataFrame after dropping duplicates. This can be useful when you want a continuous index for the cleaned data.

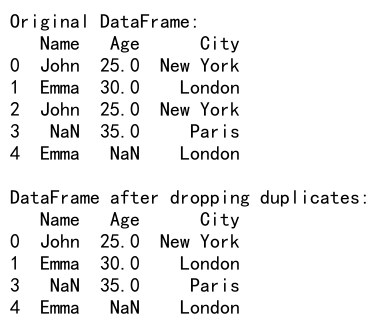
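
To make the effect on the index concrete, the sketch below compares the default result, the ignore_index=True result, and the equivalent chain with reset_index(drop=True). The variable names are illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
})

default_result = df.drop_duplicates()                      # keeps original labels
relabelled = df.drop_duplicates(ignore_index=True)         # labels become 0..n-1
chained = df.drop_duplicates().reset_index(drop=True)      # same outcome, two steps

print(default_result.index.tolist())
print(relabelled.index.tolist())
print(relabelled.equals(chained))
```

Keeping the original labels (the default) is useful when the surviving rows need to be traced back to their positions in the source data.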

Example 7: Handling Missing Values

When dealing with real-world data, you may encounter missing values (NaN). Here’s how drop_duplicates() handles missing values:

import pandas as pd

import numpy as np

# Create a sample DataFrame with missing values

data = {

'Name': ['John', 'Emma', 'John', np.nan, 'Emma'],

'Age': [25, 30, 25, 35, np.nan],

'City': ['New York', 'London', 'New York', 'Paris', 'London']

}

df = pd.DataFrame(data)

# Drop duplicates

df_no_duplicates = df.drop_duplicates()

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)

# This example demonstrates how drop_duplicates() handles missing values from pandasdataframe.com

Output:

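drop_duplicates() treats NaN values as equal when comparing rows: two rows with NaN in the same position are considered duplicates if all other values are the same. In the data above, the rows containing NaN (index 3 and 4) each differ from every other row in at least one column, so they are both kept, and only the repeated (‘John’, 25, ‘New York’) row is removed.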

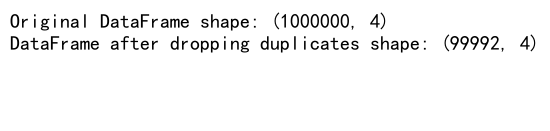
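
The following sketch isolates the NaN behaviour with rows that match in every column, missing values included. The data is made up for the demonstration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "Name": ["Emma", "Emma", np.nan, np.nan],
    "Age": [np.nan, np.nan, 35, 35],
    "City": ["London", "London", "Paris", "Paris"],
})

# In plain Python/NumPy comparisons, NaN is not equal to itself
print(np.nan == np.nan)

# drop_duplicates() nevertheless treats NaNs in the same position as matching
result = df.drop_duplicates()
print(result)
```

Rows 0 and 1 share a missing Age, and rows 2 and 3 share a missing Name, so one row from each pair is removed.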

Example 8: Dropping Duplicates in a Large Dataset

When working with large datasets, performance can be a concern. Here’s an example of how to use drop_duplicates() efficiently with a larger dataset:

import pandas as pd

import numpy as np

# Create a large sample DataFrame

np.random.seed(0)

n_rows = 1000000

data = {

'ID': np.random.randint(1, 100000, n_rows),

'Name': np.random.choice(['John', 'Emma', 'Sarah', 'Michael', 'Olivia'], n_rows),

'Age': np.random.randint(18, 80, n_rows),

'City': np.random.choice(['New York', 'London', 'Paris', 'Tokyo', 'Sydney'], n_rows)

}

df = pd.DataFrame(data)

# Drop duplicates based on 'ID' column

df_no_duplicates = df.drop_duplicates(subset=['ID'])

print("Original DataFrame shape:", df.shape)

print("DataFrame after dropping duplicates shape:", df_no_duplicates.shape)

# This example shows how to use drop_duplicates() with a large dataset from pandasdataframe.com

Output:

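In this example, we create a large DataFrame with 1 million rows and drop duplicates based on the ‘ID’ column. Because the IDs are drawn from 1 to 99,999, the result can have at most 99,999 rows. Restricting subset to the key column also means pandas only has to compare one column instead of all four.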
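
With large datasets the result cannot be checked by eye, so a couple of cheap sanity checks can be useful. This is a sketch on a smaller random dataset so that it runs quickly; the sizes and names are illustrative assumptions.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n_rows = 100_000

df = pd.DataFrame({
    "ID": rng.integers(1, 10_000, n_rows),   # values from 1 to 9,999
    "Age": rng.integers(18, 80, n_rows),
})

deduplicated = df.drop_duplicates(subset="ID")

# Check 1: one surviving row per distinct ID
n_unique_ids = df["ID"].nunique()
print(len(deduplicated), n_unique_ids)

# Check 2: the key column no longer contains repeats
print(deduplicated["ID"].is_unique)
```

Both checks are inexpensive compared with the deduplication itself and catch mistakes such as passing the wrong column name list in subset.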

Example 9: Combining drop_duplicates() with Other Operations

Often, you’ll need to combine drop_duplicates() with other data manipulation operations. Here’s an example that demonstrates this:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London'],

'Salary': [50000, 60000, 52000, 70000, 61000]

}

df = pd.DataFrame(data)

# Sort by Salary, drop duplicates based on Name and City, and reset index

df_processed = (df.sort_values('Salary', ascending=False)

.drop_duplicates(subset=['Name', 'City'])

.reset_index(drop=True))

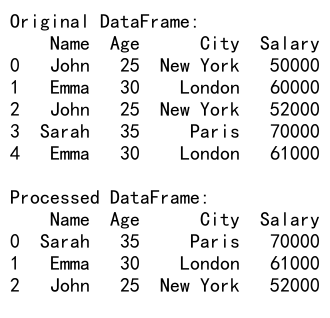

print("Original DataFrame:")

print(df)

print("\nProcessed DataFrame:")

print(df_processed)

# Add a comment to include pandasdataframe.com

# This example demonstrates combining drop_duplicates() with other operations from pandasdataframe.com

Output:

In this example, we first sort the DataFrame by ‘Salary’ in descending order, then drop duplicates based on ‘Name’ and ‘City’, and finally reset the index. This combination of operations allows us to keep the highest salary for each unique Name-City combination.
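
The same "highest salary per Name-City pair" result can also be reached without sorting. The sketch below uses a different technique, groupby() with idxmax(), rather than the sort-then-drop chain; it is offered as an alternative under the same sample data, not as a replacement.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
    "Salary": [50000, 60000, 52000, 70000, 61000],
})

# For each (Name, City) group, find the index label of the row with the largest Salary
top_row_labels = df.groupby(["Name", "City"])["Salary"].idxmax()

# Select those rows; the result is ordered by group key rather than by salary
top_salary_rows = df.loc[top_row_labels].reset_index(drop=True)
print(top_salary_rows)
```

The sort-based chain is easier to read and extends naturally to tie-breaking on several columns, while the idxmax() version states the intent ("the row with the maximum") more directly.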

Example 10: Using drop_duplicates() with MultiIndex

Pandas allows you to create DataFrames with multiple index levels. Here’s how to use drop_duplicates() with a MultiIndex DataFrame:

import pandas as pd

# Create a sample MultiIndex DataFrame

index = pd.MultiIndex.from_tuples([('A', 1), ('A', 2), ('B', 1), ('B', 2), ('A', 1)],

names=['Letter', 'Number'])

data = {

'Value': [10, 20, 30, 40, 50]

}

df = pd.DataFrame(data, index=index)

# Drop duplicates based on index

df_no_duplicates = df.reset_index().drop_duplicates(subset=['Letter', 'Number']).set_index(['Letter', 'Number'])

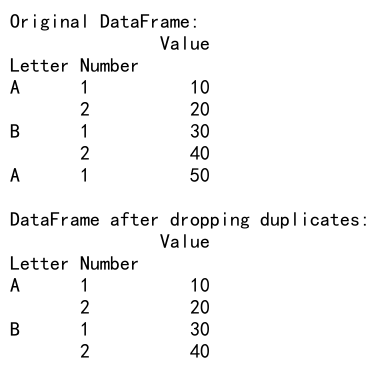

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)

# Add a comment to include pandasdataframe.com

# This example shows how to use drop_duplicates() with a MultiIndex DataFrame from pandasdataframe.com

Output:

In this example, we create a MultiIndex DataFrame and demonstrate how to drop duplicates based on the index levels. We first reset the index to convert it to columns, then apply drop_duplicates(), and finally set the index back to a MultiIndex.
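
A shorter route to the same result is to filter on the index directly. The sketch below uses Index.duplicated(), which is a separate method from drop_duplicates(); the variable names are illustrative.

```python
import pandas as pd

index = pd.MultiIndex.from_tuples(
    [("A", 1), ("A", 2), ("B", 1), ("B", 2), ("A", 1)],
    names=["Letter", "Number"],
)
df = pd.DataFrame({"Value": [10, 20, 30, 40, 50]}, index=index)

# index.duplicated() marks index entries that repeat an earlier entry;
# negating the mask keeps the first row for each (Letter, Number) pair
df_unique_index = df[~df.index.duplicated(keep="first")]
print(df_unique_index)
```

This avoids the round trip through reset_index() and set_index(), and it leaves the MultiIndex untouched.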

Example 11: Dropping Duplicates in Time Series Data

When working with time series data, you might encounter duplicate timestamps. Here’s how to handle this situation:

import pandas as pd

import numpy as np

# Create a sample time series DataFrame with duplicate timestamps

dates = pd.date_range('2023-01-01', periods=10, freq='D').repeat(2)

data = {

'Date': dates,

'Value': np.random.randn(20)

}

df = pd.DataFrame(data)

# Drop duplicates based on the Date column, keeping the last occurrence

df_no_duplicates = df.drop_duplicates(subset='Date', keep='last')

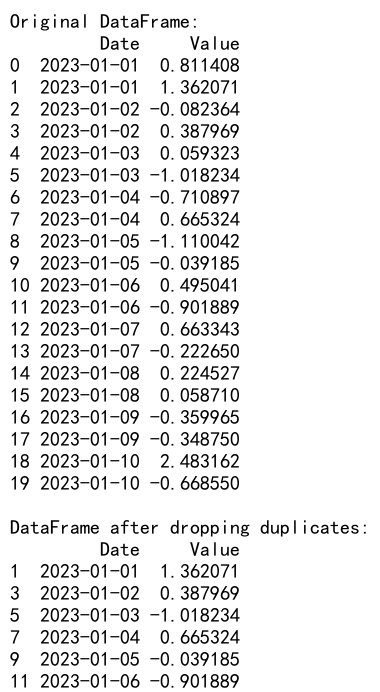

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)

# Add a comment to include pandasdataframe.com

# This example demonstrates dropping duplicates in time series data from pandasdataframe.com

Output:

In this example, we create a time series DataFrame with duplicate dates and use drop_duplicates() to keep only the last occurrence for each date. Note that keep='last' keeps the last row in the current row order, so it returns the most recent value only if the rows for each timestamp are already in chronological order.
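
When the row order is not guaranteed, sorting before dropping makes "last" mean "most recent". The sketch below assumes a hypothetical RecordedAt column that stores when each reading was captured; that column and the values are made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "Date": pd.to_datetime(["2023-01-01", "2023-01-02", "2023-01-01", "2023-01-02"]),
    # Hypothetical capture time for each reading (not part of the original example)
    "RecordedAt": pd.to_datetime([
        "2023-01-01 18:00", "2023-01-02 09:00",
        "2023-01-01 08:00", "2023-01-02 17:00",
    ]),
    "Value": [1.5, 2.0, 1.0, 2.5],
})

# Without sorting, keep='last' just takes whichever row happens to come last
naive = df.drop_duplicates(subset="Date", keep="last")

# Sorting by capture time first makes the last row per date the latest reading
latest = (
    df.sort_values(["Date", "RecordedAt"])
      .drop_duplicates(subset="Date", keep="last")
      .reset_index(drop=True)
)

print(naive)
print(latest)
```

For 2023-01-01 the unsorted version keeps the 08:00 reading because it appears later in the table, while the sorted version keeps the 18:00 reading.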

Example 12: Dropping Duplicates in Categorical Data

When working with categorical data, you might want to drop duplicates based on category combinations. Here’s an example:

import pandas as pd

# Create a sample DataFrame with categorical data

data = {

'Category1': ['A', 'B', 'A', 'B', 'C', 'A'],

'Category2': ['X', 'Y', 'X', 'Z', 'Y', 'Z'],

'Value': [1, 2, 3, 4, 5, 6]

}

df = pd.DataFrame(data)

# Convert columns to categorical type

df['Category1'] = pd.Categorical(df['Category1'])

df['Category2'] = pd.Categorical(df['Category2'])

# Drop duplicates based on category combinations

df_no_duplicates = df.drop_duplicates(subset=['Category1', 'Category2'])

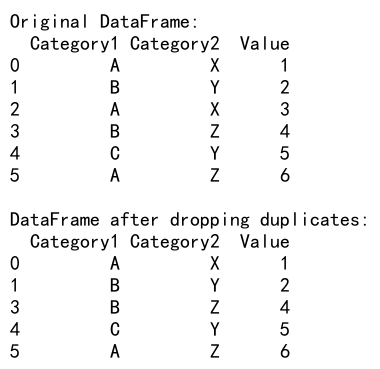

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)# Add a comment to include pandasdataframe.com

# This example shows how to drop duplicates in categorical data from pandasdataframe.com

Output:

In this example, we create a DataFrame with categorical data and demonstrate how to drop duplicates based on combinations of categories. This can be useful when you want to keep only unique category combinations in your dataset.

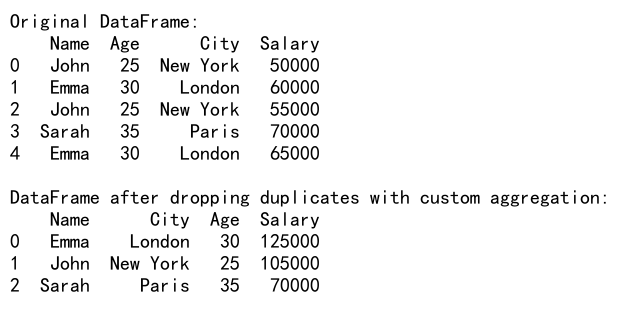

Example 13: Dropping Duplicates with Custom Aggregation

Sometimes, when dropping duplicates, you might want to aggregate the values of other columns. Here’s an example of how to achieve this:

import pandas as pd

# Create a sample DataFrame

data = {

'Name': ['John', 'Emma', 'John', 'Sarah', 'Emma'],

'Age': [25, 30, 25, 35, 30],

'City': ['New York', 'London', 'New York', 'Paris', 'London'],

'Salary': [50000, 60000, 55000, 70000, 65000]

}

df = pd.DataFrame(data)

# Drop duplicates with custom aggregation

df_aggregated = df.groupby(['Name', 'City'], as_index=False).agg({

'Age': 'first',

'Salary': 'sum'

})

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates with custom aggregation:")

print(df_aggregated)

# This example demonstrates dropping duplicates with custom aggregation from pandasdataframe.com

Output:

In this example, we use groupby() and agg() to drop duplicates based on ‘Name’ and ‘City’ while summing the ‘Salary’ and keeping the first ‘Age’ for each group. This approach allows for more complex handling of duplicate data.

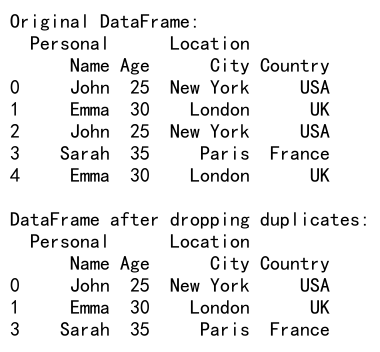
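
When duplicates are aggregated rather than dropped, it is often useful to record how many rows were merged into each result row. The sketch below uses named aggregation for this; the output column names TotalSalary and Rows are illustrative choices.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["John", "Emma", "John", "Sarah", "Emma"],
    "Age": [25, 30, 25, 35, 30],
    "City": ["New York", "London", "New York", "Paris", "London"],
    "Salary": [50000, 60000, 55000, 70000, 65000],
})

# Named aggregation: each keyword is an output column, given as (source column, function)
df_summary = df.groupby(["Name", "City"], as_index=False).agg(
    Age=("Age", "first"),
    TotalSalary=("Salary", "sum"),
    Rows=("Salary", "count"),   # number of non-missing salaries merged per group
)
print(df_summary)
```

A Rows value greater than 1 shows which result rows came from duplicated inputs, information that a plain drop_duplicates() call discards.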

Example 14: Dropping Duplicates in a DataFrame with MultiIndex Columns

When working with DataFrames that have MultiIndex columns, you might need to drop duplicates based on specific column levels. Here’s how to do it:

import pandas as pd

import numpy as np

# Create a sample DataFrame with MultiIndex columns

columns = pd.MultiIndex.from_tuples([

('Personal', 'Name'), ('Personal', 'Age'),

('Location', 'City'), ('Location', 'Country')

])

data = [

['John', 25, 'New York', 'USA'],

['Emma', 30, 'London', 'UK'],

['John', 25, 'New York', 'USA'],

['Sarah', 35, 'Paris', 'France'],

['Emma', 30, 'London', 'UK']

]

df = pd.DataFrame(data, columns=columns)

# Drop duplicates based on specific column levels

df_no_duplicates = df.drop_duplicates(subset=[('Personal', 'Name'), ('Location', 'City')])

print("Original DataFrame:")

print(df)

print("\nDataFrame after dropping duplicates:")

print(df_no_duplicates)

# This example shows how to drop duplicates in a DataFrame with MultiIndex columns from pandasdataframe.com

Output:

In this example, we create a DataFrame with MultiIndex columns and drop duplicates based on specific columns, each identified in subset by its full (top level, sub level) tuple. This can be useful when working with complex, hierarchical data structures.
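
To deduplicate on every column under one top-level label, the subset list can be built from the column tuples. This is a sketch with slightly modified sample data (one city changed so the effect is visible); the variable names are illustrative.

```python
import pandas as pd

columns = pd.MultiIndex.from_tuples([
    ("Personal", "Name"), ("Personal", "Age"),
    ("Location", "City"), ("Location", "Country"),
])
rows = [
    ["John", 25, "New York", "USA"],
    ["Emma", 30, "London", "UK"],
    ["John", 25, "New York", "USA"],
    ["Sarah", 35, "Paris", "France"],
    ["Emma", 30, "Manchester", "UK"],   # same person, different city
]
df = pd.DataFrame(rows, columns=columns)

# Collect every column tuple whose top level is 'Personal'
personal_columns = [col for col in df.columns if col[0] == "Personal"]

# Rows count as duplicates when all 'Personal' columns match, whatever the location
df_unique_people = df.drop_duplicates(subset=personal_columns)
print(df_unique_people)
```

Building the list from df.columns keeps the code in step with the data if more columns are later added under the same top-level label.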

Pandas Drop_Duplicates Conclusion

The drop_duplicates() method in Pandas is a powerful tool for handling duplicate data in DataFrames. Throughout this article, we’ve explored various aspects and use cases of this function, including:

- Basic usage and parameter explanations

- Specifying subsets of columns for duplicate identification

- Controlling which duplicates to keep (first, last, or none)

- Modifying DataFrames in-place

- Resetting indexes after dropping duplicates

- Handling missing values

- Working with large datasets

- Combining drop_duplicates() with other operations

- Using drop_duplicates() with MultiIndex DataFrames

- Dropping duplicates in time series and categorical data

- Custom aggregation when dropping duplicates

- Handling DataFrames with MultiIndex columns

- Implementing custom comparison logic for duplicate identification

By mastering these techniques, you’ll be well-equipped to handle duplicate data in various scenarios, improving the quality and reliability of your data analysis projects.

Remember that while drop_duplicates() is an essential tool for data cleaning, it’s often just one step in a larger data preparation process. Always consider the context of your data and the requirements of your analysis when deciding how to handle duplicates.

As you continue to work with Pandas and data manipulation, you’ll likely encounter more complex scenarios that require combining drop_duplicates() with other Pandas functions and custom logic. The examples provided in this article should serve as a solid foundation for tackling these challenges and developing more advanced data cleaning techniques.
