Pandas dropna

Pandas is a powerful data manipulation library in Python, and one of its most useful features is the ability to handle missing data. The dropna() function is a crucial tool in this regard, allowing users to remove rows or columns containing missing values from their DataFrames. This article will provide an in-depth exploration of the dropna() function, its various parameters, and how it can be used effectively in data cleaning and preprocessing tasks.

Introduction to Missing Data in Pandas

Before diving into the specifics of dropna(), it’s important to understand how Pandas represents missing data. In Pandas, missing values are typically represented by NaN (Not a Number) or None. Datetime-like columns use NaT, and the nullable extension dtypes (such as "Int64" and "boolean") use pd.NA. Missing values can occur for various reasons, such as data collection errors, incomplete records, or intentional omissions.
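To see which markers pandas treats as missing, here is a small sketch. The column names are made up for illustration. It builds one column per kind of missing marker and asks isna() which cells it flags.

```python
import numpy as np
import pandas as pd

# One column per kind of missing-value marker (column names are illustrative)
df = pd.DataFrame({
    "floats": [1.0, np.nan, 3.0],                                 # NaN in a float column
    "objects": ["x", None, "z"],                                  # None in an object column
    "dates": pd.to_datetime(["2023-01-01", None, "2023-01-03"]),  # None becomes NaT
    "ints": pd.array([1, pd.NA, 3], dtype="Int64"),               # pd.NA in a nullable integer column
})

# isna() flags every one of these markers as missing
print(df.isna())
print(df.isna().sum())

# dropna() therefore removes the middle row, which is missing in every column
print(df.dropna())
```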

Missing data can significantly impact analysis and modeling, so it’s crucial to handle it appropriately. Pandas provides several methods for dealing with missing data, including:

- Dropping rows or columns with missing values

- Filling missing values with a specific value or method

- Interpolating missing values

The dropna() function falls into the first category, allowing us to remove rows or columns containing missing values from our DataFrame.

Basic Usage of dropna()

At its most basic, dropna() can be called on a DataFrame without any arguments. This will remove any row that contains at least one missing value.

Let’s look at a simple example:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'A': [1, 2, np.nan, 4, 5],

'B': [5, np.nan, 7, 8, 9],

'C': [1, 2, 3, 4, 5]

})

# Drop rows with any missing values

df_cleaned = df.dropna()

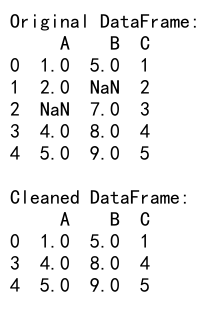

print("Original DataFrame:")

print(df)

print("\nCleaned DataFrame:")

print(df_cleaned)

Output:

In this example, we create a DataFrame with some missing values (represented by np.nan). When we call dropna() without any arguments, it removes all rows that contain at least one missing value. The resulting DataFrame df_cleaned will only contain rows where all columns have non-null values.
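One way to picture what the default call does is to rebuild it with a boolean mask: keep a row only if every value in it is non-null. This is a sketch for intuition, not how pandas implements dropna() internally. The names keep_rows and manual are illustrative.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5]
})

# True for rows in which every column holds a non-null value
keep_rows = df.notna().all(axis=1)
manual = df[keep_rows]

print(keep_rows.tolist())     # [True, False, False, True, True]
print(manual.index.tolist())  # [0, 3, 4]
```

The surviving rows keep their original index labels (0, 3, 4). Call reset_index(drop=True) afterwards if you need a contiguous index.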

Controlling Axis with dropna()

By default, dropna() drops rows (axis=0, which can also be written axis='index'). To drop columns that contain missing values instead, specify axis=1 (or axis='columns').

Here’s an example:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'A': [1, 2, np.nan, 4, 5],

'B': [5, np.nan, 7, 8, 9],

'C': [1, 2, 3, 4, 5],

'D': [np.nan, np.nan, np.nan, np.nan, np.nan]

})

# Drop columns with any missing values

df_cleaned = df.dropna(axis=1)

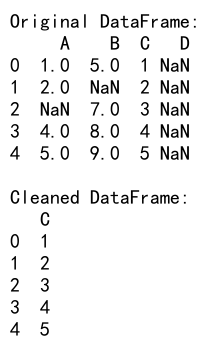

print("Original DataFrame:")

print(df)

print("\nCleaned DataFrame:")

print(df_cleaned)

Output:

In this example, we’ve added a column ‘D’ that contains only missing values. Calling dropna(axis=1) removes every column that contains at least one missing value. That drops ‘D’, and also ‘A’ and ‘B’, since each of them contains one NaN. Only column ‘C’ remains in df_cleaned.

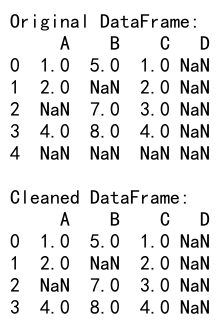
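The column-wise version can be sketched the same way. Check each column for missing values with axis=0, then keep only the columns that are complete. The mask names here are illustrative.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5],
    'D': [np.nan] * 5
})

# True for columns in which every row holds a non-null value
keep_cols = df.notna().all(axis=0)
manual = df.loc[:, keep_cols]

print(manual.columns.tolist())  # ['C']
```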

Using the ‘how’ Parameter

The how parameter in dropna() allows us to specify the condition for dropping rows or columns. It can take two values:

- ‘any’: Drop if any NA values are present (default)

- ‘all’: Drop only if all values are NA

Let’s see how this works:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'A': [1, 2, np.nan, 4, np.nan],

'B': [5, np.nan, 7, 8, np.nan],

'C': [1, 2, 3, 4, np.nan]

})

# Drop rows where all values are NA

df_cleaned_all = df.dropna(how='all')

# Drop rows where any value is NA

df_cleaned_any = df.dropna(how='any')

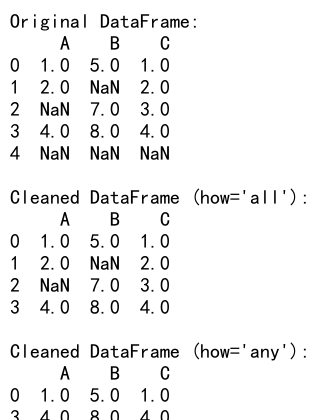

print("Original DataFrame:")

print(df)

print("\nCleaned DataFrame (how='all'):")

print(df_cleaned_all)

print("\nCleaned DataFrame (how='any'):")

print(df_cleaned_any)

Output:

In this example, we demonstrate the difference between how='all' and how='any'. With how='all', only rows where all values are NA will be dropped. With how='any', any row containing at least one NA value will be dropped.
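The two settings correspond to two different reductions over each row. how='any' keeps a row only if all of its values are present. how='all' keeps a row if at least one value is present. The sketch below uses the same data as the example above and is meant for intuition only.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, np.nan],
    'B': [5, np.nan, 7, 8, np.nan],
    'C': [1, 2, 3, 4, np.nan]
})

# how='any': a row survives only if no value is missing
any_mask = df.notna().all(axis=1)
# how='all': a row survives if at least one value is present
all_mask = df.notna().any(axis=1)

print(df.index[any_mask].tolist())  # [0, 3]
print(df.index[all_mask].tolist())  # [0, 1, 2, 3]
```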

The ‘thresh’ Parameter

The thresh parameter allows us to specify a minimum number of non-null values for a row or column to be kept. This can be useful when we want to keep rows or columns that have a certain amount of valid data.

Here’s an example:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'A': [1, 2, np.nan, 4, np.nan],

'B': [5, np.nan, 7, 8, np.nan],

'C': [1, 2, 3, 4, np.nan],

'D': [np.nan, np.nan, np.nan, np.nan, np.nan]

})

# Keep rows with at least 2 non-null values

df_cleaned = df.dropna(thresh=2)

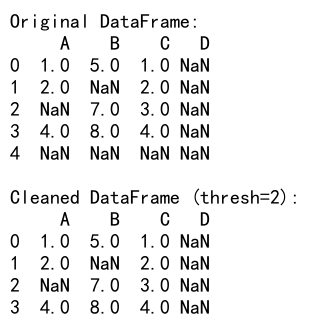

print("Original DataFrame:")

print(df)

print("\nCleaned DataFrame (thresh=2):")

print(df_cleaned)

Output:

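In this example, we keep only the rows that have at least 2 non-null values. Rows 1 and 2 each have exactly two valid values, so they are retained despite their missing entries. Row 4, which is missing in every column, is dropped. This lets us keep rows that have some missing data but still contain a useful amount of valid information.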

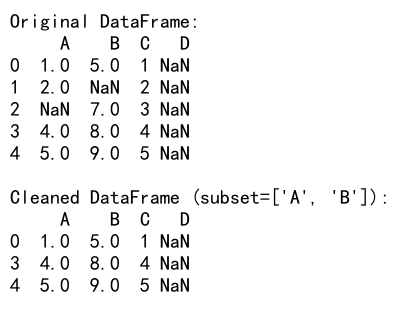
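thresh can be read as a comparison against a per-row count of non-null values. The sketch below computes those counts for the example data, so you can see exactly which rows clear the threshold. The variable names are illustrative.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, np.nan],
    'B': [5, np.nan, 7, 8, np.nan],
    'C': [1, 2, 3, 4, np.nan],
    'D': [np.nan] * 5
})

# Number of non-null values in each row
non_null_counts = df.notna().sum(axis=1)
print(non_null_counts.tolist())  # [3, 2, 2, 3, 0]

# Rows meeting the threshold are exactly what dropna(thresh=2) keeps
manual = df[non_null_counts >= 2]
print(manual.index.tolist())     # [0, 1, 2, 3]
```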

The ‘subset’ Parameter

The subset parameter allows us to consider only specific columns when deciding whether to drop a row. This can be useful when we want to focus on certain variables that are particularly important for our analysis.

Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a sample DataFrame
df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5],
    'D': [np.nan, np.nan, np.nan, np.nan, np.nan]
})

# Drop rows with missing values in columns A and B
df_cleaned = df.dropna(subset=['A', 'B'])

print("Original DataFrame:")
print(df)
print("\nCleaned DataFrame (subset=['A', 'B']):")
print(df_cleaned)
```

Output:

In this example, we only consider columns ‘A’ and ‘B’ when deciding whether to drop a row. Rows with missing values in other columns (like ‘D’) will be kept as long as ‘A’ and ‘B’ have non-null values.
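In mask form, subset just narrows the columns that are checked. The documented meaning of subset is "labels along the other axis". So when dropping columns with axis=1, subset names row labels instead. The sketch shows both cases on the example data, using row labels 0 and 1 purely for illustration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5],
    'D': [np.nan] * 5
})

# Rows: only columns A and B are checked, so the all-NaN column D is ignored
manual = df[df[['A', 'B']].notna().all(axis=1)]
print(manual.index.tolist())  # [0, 3, 4]

# Columns: with axis=1, subset lists row labels, so only rows 0 and 1 are checked
cols = df.dropna(axis=1, subset=[0, 1])
print(cols.columns.tolist())  # ['A', 'C']
```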

Combining Parameters

The real power of dropna() comes from combining its various parameters to achieve precise control over which rows or columns are dropped. Let’s look at a more complex example:

```python
import pandas as pd
import numpy as np

# Create a sample DataFrame
df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, np.nan],
    'B': [5, np.nan, 7, 8, np.nan],
    'C': [1, 2, 3, 4, np.nan],
    'D': [np.nan, np.nan, np.nan, np.nan, np.nan]
})

# Drop rows with fewer than 2 non-null values in columns A, B, and C
df_cleaned = df.dropna(subset=['A', 'B', 'C'], thresh=2)

print("Original DataFrame:")
print(df)
print("\nCleaned DataFrame:")
print(df_cleaned)
```

Output:

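In this example, we’re combining the subset and thresh parameters. Only columns ‘A’, ‘B’, and ‘C’ are counted, and a row is kept if at least 2 of those three values are non-null. The all-NaN column ‘D’ is ignored when counting, but it is still present in the result. Note that thresh cannot be combined with how: recent pandas versions raise a TypeError if both are passed.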

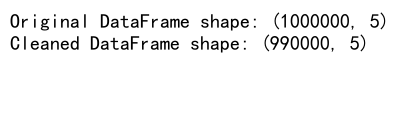
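Another common way to combine parameters is to chain two calls. This sketch first removes columns that are entirely empty with axis=1 and how='all', then applies a row threshold. Unlike the subset version above, which keeps ‘D’ in the result, chaining removes ‘D’ altogether. Which behavior you want depends on your analysis; the pipeline is an illustration, not a recommended default.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, np.nan],
    'B': [5, np.nan, 7, 8, np.nan],
    'C': [1, 2, 3, 4, np.nan],
    'D': [np.nan] * 5
})

# Step 1: drop columns in which every value is missing (removes D)
# Step 2: drop rows with fewer than 2 non-null values among the remaining columns
chained = df.dropna(axis=1, how='all').dropna(thresh=2)
print(chained.columns.tolist())  # ['A', 'B', 'C']
print(chained.index.tolist())    # [0, 1, 2, 3]

# The subset + thresh version keeps the same rows but retains column D
with_subset = df.dropna(subset=['A', 'B', 'C'], thresh=2)
print(with_subset.columns.tolist())  # ['A', 'B', 'C', 'D']
```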

Handling Large Datasets

When working with large datasets, it’s worth considering the cost of dropna(). The missing-value check is vectorized and usually fast, but dropna() returns a new DataFrame. On very large DataFrames, that copy adds to both run time and memory use.

Here’s an example of how to use dropna() efficiently with a large dataset:

```python
import pandas as pd
import numpy as np

# Create a large DataFrame
np.random.seed(0)
large_df = pd.DataFrame(np.random.randn(1000000, 5), columns=['A', 'B', 'C', 'D', 'E'])

# Introduce some missing values
large_df.iloc[::100, 0] = np.nan
large_df.iloc[::500, 1] = np.nan

# Use dropna with a subset of columns for better performance
df_cleaned = large_df.dropna(subset=['A', 'B'])

print(f"Original DataFrame shape: {large_df.shape}")
print(f"Cleaned DataFrame shape: {df_cleaned.shape}")
```

Output:

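In this example, we create a DataFrame with 1 million rows and introduce missing values in columns ‘A’ and ‘B’. Passing subset=['A', 'B'] limits the missing-value check to those two columns, which can save some work. Keep in mind that subset changes which rows are dropped, not just how fast the call runs. It gives the same result as a plain dropna() here only because no other column contains missing values.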

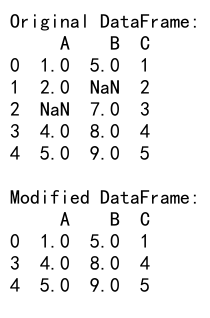
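Before using subset as a shortcut, it is worth confirming that the columns you leave out really have no missing values. The sketch below does that check on a smaller version of the same data (100,000 rows, an arbitrary size chosen to keep it quick). It then verifies that the restricted call matches the full one. Every 500th row is also a multiple of 100, so the NaNs in ‘B’ fall on rows that are already missing in ‘A’, and exactly 1,000 rows are removed.

```python
import numpy as np
import pandas as pd

np.random.seed(0)
n = 100_000  # smaller than the example above, purely to keep this quick
large_df = pd.DataFrame(np.random.randn(n, 5), columns=['A', 'B', 'C', 'D', 'E'])
large_df.iloc[::100, 0] = np.nan
large_df.iloc[::500, 1] = np.nan

# Which columns actually contain missing values?
cols_with_na = large_df.columns[large_df.isna().any()].tolist()
print(cols_with_na)  # ['A', 'B']

# Restricting the check to those columns gives the same result as a full dropna()
full = large_df.dropna()
restricted = large_df.dropna(subset=cols_with_na)
print(full.equals(restricted))  # True
print(full.shape)               # (99000, 5)
```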

Inplace Parameter

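The inplace parameter in dropna() modifies the original DataFrame instead of returning a new one; with inplace=True, the method returns None. Despite its name, inplace=True generally does not save memory or time, because pandas usually still builds the filtered data internally before replacing the original. Reassigning the result (df = df.dropna()) is typically just as efficient and is easier to chain.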

Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a sample DataFrame
df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5]
})

print("Original DataFrame:")
print(df)

# Drop rows with missing values in-place
df.dropna(inplace=True)

print("\nModified DataFrame:")
print(df)
```

Output:

In this example, instead of creating a new DataFrame, we modify the original df in-place by setting inplace=True.

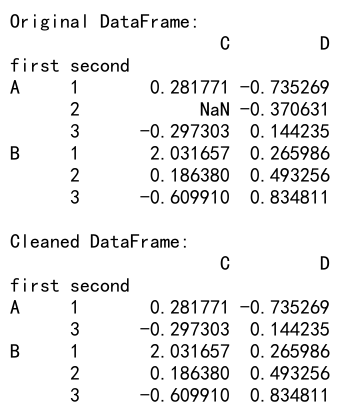
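Because an in-place call returns None, a frequent mistake is to assign its result. This sketch shows that pitfall next to the reassignment style, which returns a new DataFrame and can be chained with other methods such as reset_index(). The small DataFrames are made up for illustration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({'A': [1, 2, np.nan], 'B': [4, np.nan, 6]})

# inplace=True mutates df and returns None
returned = df.dropna(inplace=True)
print(returned)  # None
print(len(df))   # 1

# Pitfall: assigning the result of an in-place call loses the DataFrame
df2 = pd.DataFrame({'A': [1, np.nan]})
df2 = df2.dropna(inplace=True)
print(df2)       # None

# Reassignment returns a new DataFrame and supports method chaining
df3 = pd.DataFrame({'A': [1, np.nan, 3]})
df3 = df3.dropna().reset_index(drop=True)
print(df3['A'].tolist())  # [1.0, 3.0]
```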

Using dropna() with MultiIndex

dropna() works the same way on DataFrames with a MultiIndex. It looks at the values, not the index labels, so by default a row is dropped if any of its values is missing. The remaining rows keep their full MultiIndex labels.

Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a MultiIndex DataFrame
index = pd.MultiIndex.from_product([['A', 'B'], [1, 2, 3]],
                                   names=['first', 'second'])
df = pd.DataFrame(np.random.randn(6, 2), index=index, columns=['C', 'D'])
df.loc[('A', 2), 'C'] = np.nan

print("Original DataFrame:")
print(df)

# Drop rows with any missing values
df_cleaned = df.dropna()

print("\nCleaned DataFrame:")
print(df_cleaned)
```

Output:

In this example, we create a MultiIndex DataFrame and introduce a missing value. When we call dropna(), it removes the entire row corresponding to the MultiIndex (‘A’, 2).

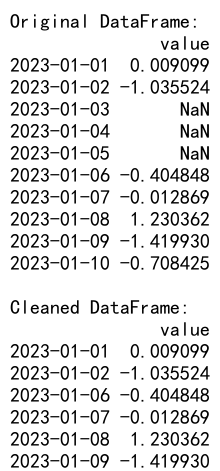
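To confirm which index entries were removed, you can compare the index before and after with Index.difference. The sketch below seeds NumPy so the data is reproducible. It then counts the surviving rows per outer level with groupby(level='first'), which should show group ‘A’ with two rows and group ‘B’ with three.

```python
import numpy as np
import pandas as pd

np.random.seed(0)
index = pd.MultiIndex.from_product([['A', 'B'], [1, 2, 3]],
                                   names=['first', 'second'])
df = pd.DataFrame(np.random.randn(6, 2), index=index, columns=['C', 'D'])
df.loc[('A', 2), 'C'] = np.nan

df_cleaned = df.dropna()

# Labels present before dropna() but not after
dropped = df.index.difference(df_cleaned.index)
print(list(dropped))  # [('A', 2)]

# Rows remaining in each outer-level group
counts = df_cleaned.groupby(level='first').size()
print(counts)
```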

Using dropna() with Time Series Data

With time series data, dropna() is useful for removing timestamps whose values are missing. Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a time series DataFrame
dates = pd.date_range('20230101', periods=10)
df = pd.DataFrame({'value': np.random.randn(10)}, index=dates)

# Introduce some missing values
df.loc['2023-01-03':'2023-01-05', 'value'] = np.nan

print("Original DataFrame:")
print(df)

# Drop rows with missing values
df_cleaned = df.dropna()

print("\nCleaned DataFrame:")
print(df_cleaned)
```

Output:

In this example, we create a time series DataFrame and introduce some missing values for specific dates. Using dropna() removes these dates from the DataFrame, potentially creating gaps in the time series.

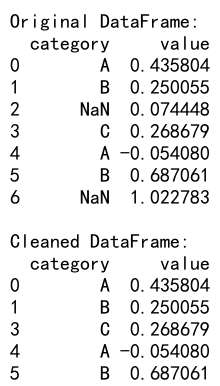
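If the gaps matter for later steps, you can measure them and, when needed, put the missing dates back. This sketch takes the differences between consecutive timestamps to find the largest gap. It then uses asfreq('D') to rebuild the daily index, with NaN in the reinserted rows. Deterministic values from np.arange replace the random ones so the results are reproducible.

```python
import numpy as np
import pandas as pd

dates = pd.date_range('20230101', periods=10)
df = pd.DataFrame({'value': np.arange(10, dtype=float)}, index=dates)
df.loc['2023-01-03':'2023-01-05', 'value'] = np.nan

df_cleaned = df.dropna()

# Time between consecutive remaining timestamps (the first entry is NaT)
gaps = df_cleaned.index.to_series().diff()
print(gaps.max())  # the largest gap is 4 days (2023-01-02 to 2023-01-06)

# Reinstate a regular daily index; the dropped dates come back as NaN
restored = df_cleaned.asfreq('D')
print(len(restored), restored['value'].isna().sum())  # 10 3
```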

Handling Missing Values in Categorical Data

With categorical data, dropna() removes the rows whose category is missing. It does not change the column’s list of categories: any category left unused after dropping is still part of the dtype. Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a DataFrame with categorical data
df = pd.DataFrame({
    'category': pd.Categorical(['A', 'B', np.nan, 'C', 'A', 'B', np.nan]),
    'value': np.random.randn(7)
})

print("Original DataFrame:")
print(df)

# Drop rows with missing categories
df_cleaned = df.dropna()

print("\nCleaned DataFrame:")
print(df_cleaned)
```

Output:

In this example, we create a DataFrame with a categorical column that includes some missing values. Using dropna() removes the rows where the category is missing.

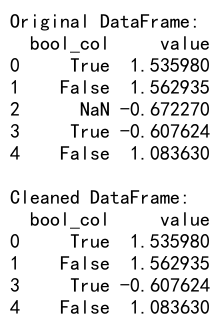
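The sketch below makes the point about categories concrete. Row 3 is the only row with category ‘C’, and it is dropped because its value is missing. Afterwards ‘C’ is still listed among the categories until remove_unused_categories() is called. The data is made up for illustration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'category': pd.Categorical(['A', 'B', np.nan, 'C']),
    'value': [1.0, 2.0, 3.0, np.nan],
})

# Row 2 (missing category) and row 3 (missing value) are dropped
df_cleaned = df.dropna()

# 'C' no longer appears in the data but is still a category of the dtype
print(df_cleaned['category'].cat.categories.tolist())  # ['A', 'B', 'C']

# Drop categories that no row uses any more
trimmed = df_cleaned['category'].cat.remove_unused_categories()
print(trimmed.cat.categories.tolist())  # ['A', 'B']
```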

Using dropna() with Boolean Data

Boolean columns in Pandas can also contain missing values. Here’s how dropna() handles these:

```python
import pandas as pd
import numpy as np

# Create a DataFrame with boolean data
df = pd.DataFrame({
    'bool_col': [True, False, np.nan, True, False],
    'value': np.random.randn(5)
})

print("Original DataFrame:")
print(df)

# Drop rows with missing boolean values
df_cleaned = df.dropna()

print("\nCleaned DataFrame:")
print(df_cleaned)
```

Output:

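In this example, we create a DataFrame with a boolean column that includes a missing value, and dropna() removes that row. Because the list mixes True/False with np.nan, pandas stores bool_col with the object dtype rather than bool. dropna() still recognizes the np.nan entry as missing.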

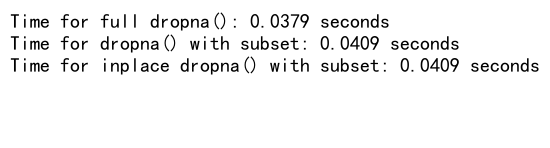
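If you want a column that is genuinely boolean and can still hold missing values, pandas offers the nullable "boolean" dtype, which uses pd.NA. The sketch contrasts it with the object column produced by a plain list that contains np.nan. It then converts back to a NumPy bool column once dropna() has removed the missing entries.

```python
import numpy as np
import pandas as pd

# A plain list mixing booleans and np.nan produces an object column
obj_col = pd.Series([True, False, np.nan, True, False])
print(obj_col.dtype)  # object

# The nullable "boolean" dtype stores the missing entry as pd.NA
bool_col = pd.Series([True, False, None, True, False], dtype="boolean")
print(bool_col.dtype)  # boolean

cleaned = bool_col.dropna()
print(cleaned.tolist())  # [True, False, True, False]

# With no missing values left, converting to NumPy's bool dtype is safe
as_numpy_bool = cleaned.astype(bool)
print(as_numpy_bool.dtype)  # bool
```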

Performance Considerations

When working with very large datasets, the performance of dropna() can become a concern. Here are some tips to optimize its use:

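- Use the subset parameter to restrict the check to specific columns when possible, but remember that it also changes which rows are dropped.

- inplace=True is often suggested as a way to avoid creating a new DataFrame, but in practice it rarely saves time or memory.

- If you only want to remove rows in which every value is missing, use how='all'. This is a choice about which rows to drop rather than a speed optimization.

Here’s an example that times these approaches: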

```python
import time

import pandas as pd
import numpy as np

# Create a large DataFrame
np.random.seed(0)
large_df = pd.DataFrame(np.random.randn(1000000, 5), columns=['A', 'B', 'C', 'D', 'E'])

# Introduce some missing values
large_df.iloc[::100, 0] = np.nan
large_df.iloc[::500, 1] = np.nan

# Measure time for different approaches (perf_counter is intended for timing intervals)
start_time = time.perf_counter()
df_cleaned1 = large_df.dropna()
time1 = time.perf_counter() - start_time

start_time = time.perf_counter()
df_cleaned2 = large_df.dropna(subset=['A', 'B'])
time2 = time.perf_counter() - start_time

# Run the in-place version last, because it modifies large_df
start_time = time.perf_counter()
large_df.dropna(subset=['A', 'B'], inplace=True)
time3 = time.perf_counter() - start_time

print(f"Time for full dropna(): {time1:.4f} seconds")
print(f"Time for dropna() with subset: {time2:.4f} seconds")
print(f"Time for inplace dropna() with subset: {time3:.4f} seconds")
```

Output:

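This example times a full dropna(), a dropna() restricted to subset=['A', 'B'], and the same restricted call with inplace=True. The exact numbers depend on your machine and pandas version. Restricting the check with subset can save some work, while inplace=True is usually not noticeably faster than reassigning the result.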

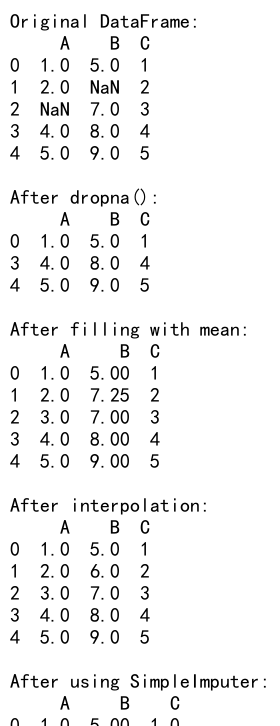
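Single wall-clock measurements like the ones above can be noisy. A slightly more robust sketch repeats each call several times with the standard-library timeit module and keeps the fastest run. The helper name best_time is hypothetical, and the 200,000-row size is an arbitrary choice to keep the run short.

```python
import timeit

import numpy as np
import pandas as pd

np.random.seed(0)
large_df = pd.DataFrame(np.random.randn(200_000, 5), columns=list('ABCDE'))
large_df.iloc[::100, 0] = np.nan
large_df.iloc[::500, 1] = np.nan


def best_time(func, repeat=5):
    """Hypothetical helper: fastest of several single-call timings, in seconds."""
    return min(timeit.repeat(func, number=1, repeat=repeat))


# Neither call modifies large_df, so each repetition sees the same data
t_full = best_time(lambda: large_df.dropna())
t_subset = best_time(lambda: large_df.dropna(subset=['A', 'B']))

print(f"full dropna():              {t_full:.4f} s")
print(f"dropna(subset=['A', 'B']):  {t_subset:.4f} s")
```

Taking the minimum rather than the mean reduces the influence of background activity on the machine. The comparison is still only indicative of your own setup.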

Alternatives to dropna()

While dropna() is a powerful tool for handling missing data, it’s not always the best solution. Sometimes, dropping rows or columns can lead to a significant loss of data. Here are some alternatives to consider:

- Filling missing values with a specific value (e.g., mean, median, mode)

- Interpolating missing values

- Using machine learning techniques to predict missing values

Let’s look at an example that compares dropna() with some of these alternatives:

```python
import pandas as pd
import numpy as np
from sklearn.impute import SimpleImputer  # requires scikit-learn

# Create a sample DataFrame
df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5]
})

print("Original DataFrame:")
print(df)

# Method 1: dropna()
df_dropped = df.dropna()

# Method 2: Fill with mean
df_mean = df.fillna(df.mean())

# Method 3: Interpolate
df_interp = df.interpolate()

# Method 4: Use SimpleImputer from sklearn (it returns a NumPy array,
# so restore the column names and the original index)
imputer = SimpleImputer(strategy='mean')
df_imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns, index=df.index)

print("\nAfter dropna():")
print(df_dropped)
print("\nAfter filling with mean:")
print(df_mean)
print("\nAfter interpolation:")
print(df_interp)
print("\nAfter using SimpleImputer:")
print(df_imputed)
```

Output:

This example demonstrates different approaches to handling missing data, including dropna(), filling with mean values, interpolation, and using scikit-learn’s SimpleImputer.
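A quick check before choosing among these approaches is to measure how much data dropna() would discard. The sketch below computes the share of rows that would be lost and the fraction of missing values per column for the sample DataFrame. How large a loss is acceptable depends on your data and analysis, so no cutoff is suggested here.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'A': [1, 2, np.nan, 4, 5],
    'B': [5, np.nan, 7, 8, 9],
    'C': [1, 2, 3, 4, 5]
})

# Share of rows that a default dropna() would remove
rows_lost = len(df) - len(df.dropna())
share_lost = rows_lost / len(df)
print(share_lost)  # 0.4

# Fraction of missing values in each column
missing_share = df.isna().mean()
print(missing_share)
```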

Pandas dropna Conclusion

The dropna() function in Pandas is a versatile tool for handling missing data in DataFrames. Its flexibility allows for precise control over which rows or columns are removed based on missing values. Key points to remember include:

- By default, dropna() removes rows with any missing values.

- The axis parameter allows you to drop rows (axis=0) or columns (axis=1).

- The how parameter lets you specify whether to drop when any or all values are missing.

- The thresh parameter allows you to set a minimum number of non-null values for a row or column to be kept.

- The subset parameter allows you to focus on specific columns when deciding whether to drop a row.

- The inplace parameter allows you to modify the original DataFrame instead of creating a new one.

While dropna() is powerful, it’s important to use it judiciously. Dropping too much data can lead to loss of important information or introduce bias into your analysis. Always consider the nature of your data and the requirements of your analysis when deciding how to handle missing values.

In many real-world scenarios, a combination of techniques including dropna(), imputation, and domain-specific approaches may be necessary to effectively handle missing data. The key is to understand your data, the reasons for missing values, and the potential impact of different missing data handling strategies on your analysis.

Remember, the goal of handling missing data is not just to remove it, but to ensure that your dataset is as complete and accurate as possible for your specific analytical needs. Use dropna() as part of a thoughtful, comprehensive approach to data cleaning and preparation.
