Mastering Pandas GroupBy Mode

Pandas groupby mode refers to the group-wise operations that the pandas library exposes through its groupby() method, which lets data scientists and analysts split a dataset into groups and compute on each group. This article covers its main applications, methods, and best practices: aggregation, transformation, filtering, time series grouping, performance tuning, and missing-data handling.

Introduction to Pandas GroupBy Mode

Pandas groupby mode lets you split your data into groups based on one or more keys, apply an operation to each group, and combine the results, a pattern often called split-apply-combine. It is inspired by the SQL GROUP BY clause and provides a flexible way to aggregate, transform, and filter data within groups.

Let’s start with a simple example to illustrate the basic usage of pandas groupby mode:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],

'Age': [25, 30, 25, 30, 35],

'City': ['New York', 'London', 'New York', 'Paris', 'Tokyo'],

'Salary': [50000, 60000, 55000, 65000, 70000]

})

# Group by 'Name' and calculate the mean salary

grouped = df.groupby('Name')['Salary'].mean()

print("Grouped data:")

print(grouped)

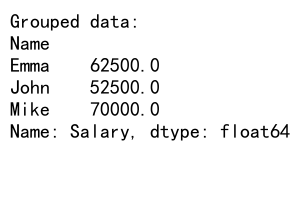

Output:

In this example, we use pandas groupby mode to group the data by the ‘Name’ column and calculate the mean salary for each person. The groupby() function creates a GroupBy object, which we can then apply aggregation functions to, such as mean().
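To make the split, apply, and combine steps behind that one-liner more concrete, here is a minimal sketch that performs them by hand on a reduced copy of the sample data and compares the result with the groupby() call. The variable name `manual` is only illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],
    'Salary': [50000, 60000, 55000, 65000, 70000],
})

# Split: iterating over the GroupBy object yields one sub-DataFrame per name.
# Apply: take the mean salary of each piece.
# Combine: collect the per-group results into a single Series.
manual = pd.Series(
    {name: group['Salary'].mean() for name, group in df.groupby('Name')},
    name='Salary',
)

# The one-liner performs the same three steps internally.
grouped = df.groupby('Name')['Salary'].mean()

print(manual)
print(grouped)
```

Both Series hold the same per-person means; the built-in version is shorter and avoids the Python-level loop.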

Understanding the Pandas GroupBy Object

When you use the groupby() function in pandas, it returns a GroupBy object. This object is the foundation for all group-wise operations in pandas groupby mode. Let’s explore the properties and methods of the GroupBy object:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Category': ['A', 'B', 'A', 'B', 'A', 'C'],

'Value': [10, 20, 15, 25, 30, 35],

'Date': pd.date_range('2023-01-01', periods=6)

})

# Create a GroupBy object

grouped = df.groupby('Category')

# Explore GroupBy object properties

print("Groups:")

print(grouped.groups)

print("\nGroup keys:")

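# The .grouper attribute is deprecated in recent pandas releases; the keys are available from .groups
print(list(grouped.groups))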

print("\nNumber of groups:")

print(grouped.ngroups)

In this example, we create a GroupBy object by grouping the DataFrame by the ‘Category’ column. We then explore some of the properties of the GroupBy object, such as the groups, group keys, and the number of groups.
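Beyond inspecting its properties, a GroupBy object can be iterated over or queried for a single group. The following sketch, which reuses the sample categories without the date column, shows iteration, get_group(), and size().

```python
import pandas as pd

df = pd.DataFrame({
    'Category': ['A', 'B', 'A', 'B', 'A', 'C'],
    'Value': [10, 20, 15, 25, 30, 35],
})
grouped = df.groupby('Category')

# Iterating yields (key, sub-DataFrame) pairs, one per group
for key, group in grouped:
    print(key, group['Value'].tolist())

# Pull out the rows of a single group
group_a = grouped.get_group('A')
print(group_a)

# Count the rows in each group
sizes = grouped.size()
print(sizes)
```

Iteration is handy for debugging, but for real computations the built-in aggregation methods are usually the better choice.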

Aggregation with Pandas GroupBy Mode

One of the most common operations in pandas groupby mode is aggregation. Aggregation allows you to compute summary statistics for each group. Pandas provides a wide range of built-in aggregation functions, and you can also define custom aggregation functions.

Let’s look at some examples of aggregation using pandas groupby mode:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'Store': ['A', 'B', 'A', 'B', 'A', 'B'],

'Product': ['X', 'Y', 'Z', 'X', 'Y', 'Z'],

'Sales': [100, 200, 150, 300, 250, 350],

'Quantity': [10, 15, 12, 20, 18, 25]

})

# Single aggregation

print("Mean sales by store:")

print(df.groupby('Store')['Sales'].mean())

# Multiple aggregations

print("\nMultiple aggregations:")

print(df.groupby('Store').agg({

'Sales': ['mean', 'sum'],

'Quantity': ['min', 'max']

}))

# Custom aggregation function

def range_diff(x):

    return x.max() - x.min()

print("\nCustom aggregation:")

print(df.groupby('Store').agg({

'Sales': range_diff,

'Quantity': 'sum'

}))

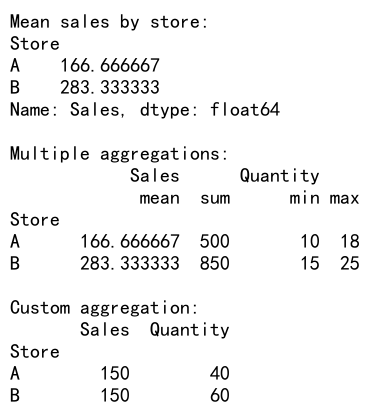

Output:

In this example, we demonstrate various aggregation techniques using pandas groupby mode. We perform single aggregation (mean sales by store), multiple aggregations (mean and sum of sales, min and max of quantity), and custom aggregation (range difference for sales and sum for quantity).
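The dictionary form above produces hierarchical column names when several functions are applied to one column. As a possible alternative, the sketch below uses pandas named aggregation, where each keyword becomes a flat output column. The output column names (`mean_sales`, `total_sales`, and so on) are illustrative choices, not part of the original example.

```python
import pandas as pd

df = pd.DataFrame({
    'Store': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Sales': [100, 200, 150, 300, 250, 350],
    'Quantity': [10, 15, 12, 20, 18, 25],
})

# Each keyword is an output column: (input column, aggregation)
summary = df.groupby('Store').agg(
    mean_sales=('Sales', 'mean'),
    total_sales=('Sales', 'sum'),
    sales_range=('Sales', lambda s: s.max() - s.min()),
    max_quantity=('Quantity', 'max'),
)

print(summary)
```

Flat, descriptive column names tend to be easier to work with in later steps such as merging or plotting.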

Transformation with Pandas GroupBy Mode

Transformation is another powerful feature of pandas groupby mode. Unlike aggregation, which reduces the data to a single value per group, transformation returns a result with the same shape as the input data. This is useful for operations like standardization, ranking, or filling missing values within groups.

Here’s an example of transformation using pandas groupby mode:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Group': ['A', 'B', 'A', 'B', 'A', 'B'],

'Value': [10, 20, 15, 25, 30, 35]

})

# Standardize values within each group

df['Standardized'] = df.groupby('Group')['Value'].transform(lambda x: (x - x.mean()) / x.std())

# Rank values within each group

df['Rank'] = df.groupby('Group')['Value'].transform('rank')

# Fill missing values with group mean

df['Value_with_mean'] = df.groupby('Group')['Value'].transform(lambda x: x.fillna(x.mean()))

print(df)

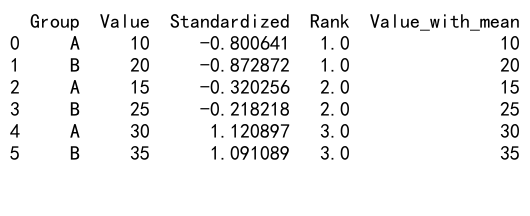

Output:

In this example, we perform three transformations using pandas groupby mode:

1. Standardize values within each group

2. Rank values within each group

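3. Fill missing values with the group mean (this leaves the values unchanged here, because the sample ‘Value’ column contains no missing values)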

These transformations demonstrate how pandas groupby mode can be used to perform complex operations while maintaining the original structure of the data.
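The difference in output shape between aggregation and transformation can be checked directly. This sketch, on the same sample data, compares sum() with transform('sum') and uses the transformed result to compute each row's share of its group total. The `Share` column is a hypothetical addition for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    'Group': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Value': [10, 20, 15, 25, 30, 35],
})

# Aggregation: one value per group
group_totals = df.groupby('Group')['Value'].sum()

# Transformation: the group total repeated for every input row
row_totals = df.groupby('Group')['Value'].transform('sum')

# Because row_totals aligns with df, it can be used in row-wise arithmetic
df['Share'] = df['Value'] / row_totals

print(group_totals)
print(df)
```

Since the transformed Series shares the index of the original DataFrame, no merge is needed to bring group-level values back to the rows.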

Filtering with Pandas GroupBy Mode

Filtering is another important operation that can be performed using pandas groupby mode. It allows you to select groups based on certain conditions or criteria. This is particularly useful when you want to focus on specific subsets of your data.

Let’s look at some examples of filtering with pandas groupby mode:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Category': ['A', 'B', 'A', 'B', 'A', 'C', 'C'],

'Value': [10, 20, 15, 25, 30, 35, 40],

'pandasdataframe.com': ['x', 'y', 'z', 'x', 'y', 'z', 'x']

})

# Filter groups with more than 2 items

large_groups = df.groupby('Category').filter(lambda x: len(x) > 2)

print("Groups with more than 2 items:")

print(large_groups)

# Filter groups where the mean value is greater than 20

high_value_groups = df.groupby('Category').filter(lambda x: x['Value'].mean() > 20)

print("\nGroups with mean value > 20:")

print(high_value_groups)

# Filter groups based on a condition in another column

specific_groups = df.groupby('Category').filter(lambda x: 'x' in x['pandasdataframe.com'].values)

print("\nGroups containing 'x' in pandasdataframe.com column:")

print(specific_groups)

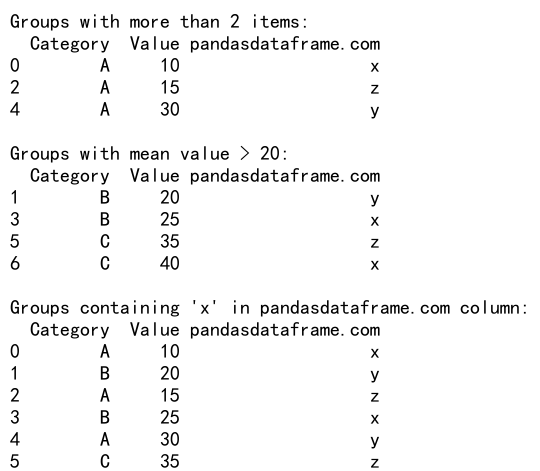

Output:

In this example, we demonstrate three different filtering scenarios using pandas groupby mode:

1. Filtering groups with more than 2 items

2. Filtering groups where the mean value is greater than 20

3. Filtering groups based on a condition in another column

These examples showcase the flexibility of pandas groupby mode in selecting specific subsets of data based on group-wise conditions.
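A group-wise filter can often be written as a boolean mask built with transform(), which avoids calling a Python function once per group. The sketch below reproduces the "mean value greater than 20" filter both ways on a reduced copy of the sample data.

```python
import pandas as pd

df = pd.DataFrame({
    'Category': ['A', 'B', 'A', 'B', 'A', 'C', 'C'],
    'Value': [10, 20, 15, 25, 30, 35, 40],
})

# filter(): keep every row of each group whose mean exceeds 20
by_filter = df.groupby('Category').filter(lambda g: g['Value'].mean() > 20)

# Equivalent mask: broadcast each group's mean to its rows, then compare
mask = df.groupby('Category')['Value'].transform('mean') > 20
by_mask = df[mask]

print(by_filter)
print(by_mask)
```

Both approaches keep the rows of groups B and C; the mask version may scale better on data with many groups.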

Advanced Techniques with Pandas GroupBy Mode

Now that we’ve covered the basics of pandas groupby mode, let’s explore some advanced techniques that can further enhance your data analysis capabilities.

Multi-level Grouping

Pandas groupby mode supports grouping by multiple columns, allowing for more complex analyses:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Category': ['A', 'B', 'A', 'B', 'A', 'B'],

'Subcategory': ['X', 'Y', 'Y', 'X', 'X', 'Y'],

'Value': [10, 20, 15, 25, 30, 35],

'pandasdataframe.com': ['a', 'b', 'c', 'd', 'e', 'f']

})

# Multi-level grouping

grouped = df.groupby(['Category', 'Subcategory'])

# Aggregate values

result = grouped['Value'].agg(['mean', 'sum', 'count'])

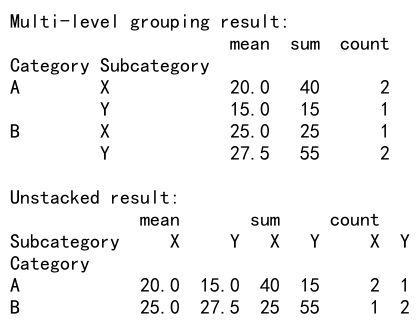

print("Multi-level grouping result:")

print(result)

# Unstack the result for a different view

unstacked = result.unstack()

print("\nUnstacked result:")

print(unstacked)

Output:

In this example, we group the data by both ‘Category’ and ‘Subcategory’, creating a multi-level grouping. We then aggregate the ‘Value’ column using multiple functions and demonstrate how to unstack the result for a different perspective.
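Multi-level grouping returns a result indexed by a MultiIndex. When ordinary columns are preferred, one option is as_index=False (or a reset_index() call afterwards), as in this sketch on the same sample data.

```python
import pandas as pd

df = pd.DataFrame({
    'Category': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Subcategory': ['X', 'Y', 'Y', 'X', 'X', 'Y'],
    'Value': [10, 20, 15, 25, 30, 35],
})

# Keep the grouping keys as regular columns instead of a MultiIndex
flat = df.groupby(['Category', 'Subcategory'], as_index=False)['Value'].sum()

# The same table, produced by resetting the MultiIndex afterwards
via_reset = df.groupby(['Category', 'Subcategory'])['Value'].sum().reset_index()

print(flat)
```

A flat result is often more convenient for merging with other tables or exporting to CSV.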

Grouping with Time Series Data

Pandas groupby mode is particularly useful when working with time series data. You can group by various time periods and perform time-based analyses:

import pandas as pd

import numpy as np

# Create a sample time series DataFrame

dates = pd.date_range('2023-01-01', periods=100, freq='D')

df = pd.DataFrame({

'Date': dates,

'Value': np.random.randn(100),

'pandasdataframe.com': np.random.choice(['A', 'B', 'C'], 100)

})

# Group by month and calculate monthly statistics

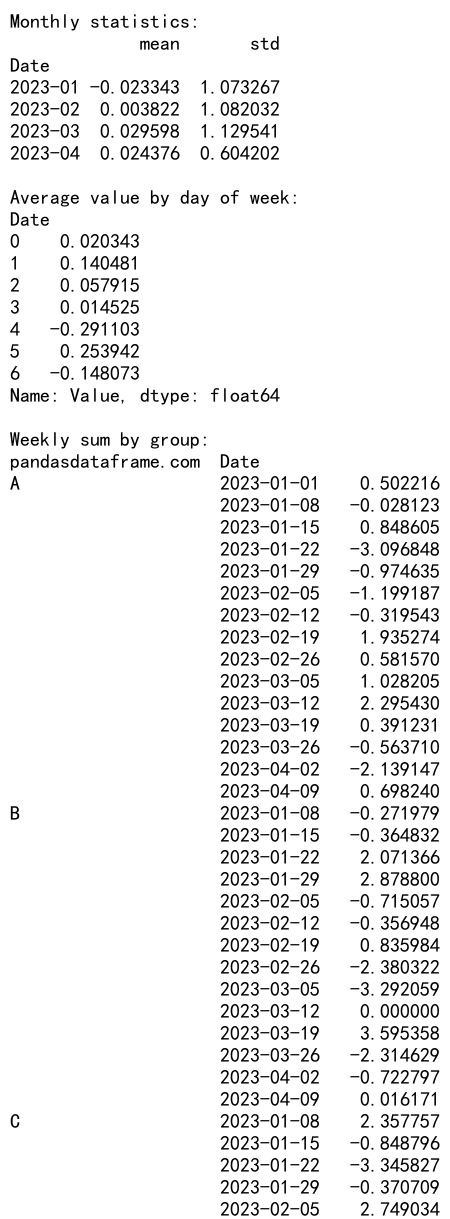

monthly_stats = df.groupby(df['Date'].dt.to_period('M'))['Value'].agg(['mean', 'std'])

print("Monthly statistics:")

print(monthly_stats)

# Group by day of week and calculate average value

day_of_week_avg = df.groupby(df['Date'].dt.dayofweek)['Value'].mean()

print("\nAverage value by day of week:")

print(day_of_week_avg)

# Group by pandasdataframe.com and resample to weekly frequency

weekly_group_sum = df.groupby('pandasdataframe.com').resample('W', on='Date')['Value'].sum()

print("\nWeekly sum by group:")

print(weekly_group_sum)

Output:

This example demonstrates how to use pandas groupby mode with time series data. We group by month, day of week, and combine grouping with resampling to perform various time-based analyses.
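Another way to group by a time period is pd.Grouper, which takes the date column and a frequency and can be combined with other keys in the same groupby() call. The sketch below groups 100 daily rows by calendar month; the seed and the 'MS' (month start) frequency are choices made for this illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible
df = pd.DataFrame({
    'Date': pd.date_range('2023-01-01', periods=100, freq='D'),
    'Value': rng.standard_normal(100),
})

# Group rows into calendar months, labelled by the first day of each month
monthly = df.groupby(pd.Grouper(key='Date', freq='MS'))['Value'].agg(['mean', 'count'])

print(monthly)
```

The count column confirms how many daily rows fall into each month: 31, 28, 31, and the 10 remaining days in April.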

Rolling Window Calculations with GroupBy

Pandas groupby mode can be combined with rolling window calculations to perform group-wise time series analysis:

import pandas as pd

import numpy as np

# Create a sample DataFrame with time series data

dates = pd.date_range('2023-01-01', periods=100, freq='D')

df = pd.DataFrame({

'Date': dates,

'Group': np.random.choice(['A', 'B', 'C'], 100),

'Value': np.random.randn(100),

'pandasdataframe.com': np.random.choice(['X', 'Y', 'Z'], 100)

})

# Calculate 7-day rolling mean for each group

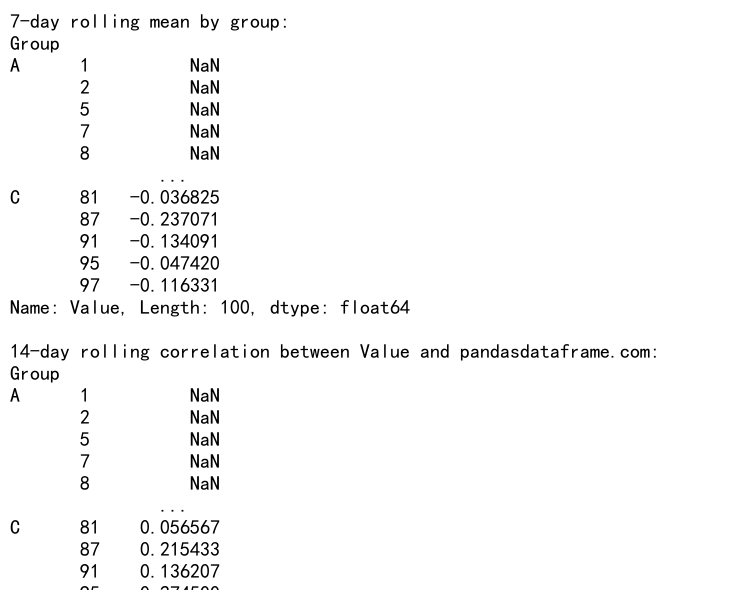

rolling_mean = df.groupby('Group')['Value'].rolling(window=7).mean()

print("7-day rolling mean by group:")

print(rolling_mean)

# Calculate 14-day rolling correlation between Value and pandasdataframe.com

df['pandasdataframe.com_numeric'] = pd.factorize(df['pandasdataframe.com'])[0]

rolling_corr = df.groupby('Group')[['Value', 'pandasdataframe.com_numeric']].rolling(window=14).corr().unstack().iloc[:, 1]

print("\n14-day rolling correlation between Value and pandasdataframe.com:")

print(rolling_corr)

Output:

In this example, we demonstrate how to combine pandas groupby mode with rolling window calculations. We calculate a 7-day rolling mean for each group and a 14-day rolling correlation between two columns within each group.
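The rolling windows above are given as integers, which pandas interprets as a number of rows. If a calendar-based window is wanted instead, one option is an offset string such as '7D' together with on='Date'. The sketch below uses a tiny hand-made dataset (not the random data from the example) so the difference between the two window types is easy to verify.

```python
import pandas as pd

# Small illustrative dataset, already sorted by date
df = pd.DataFrame({
    'Date': pd.to_datetime(['2023-01-01', '2023-01-01', '2023-01-02',
                            '2023-01-05', '2023-01-10']),
    'Group': ['A', 'B', 'A', 'B', 'A'],
    'Value': [1.0, 10.0, 3.0, 20.0, 5.0],
})

# Time-based window: each row averages the values from the preceding 7 days
rolling_7d = df.groupby('Group').rolling('7D', on='Date')['Value'].mean()

# Row-based window: each row averages the current and previous row of its group
rolling_2rows = df.groupby('Group')['Value'].rolling(window=2).mean()

print(rolling_7d)
print(rolling_2rows)
```

For group A, the row dated 2023-01-10 has no other observation in the preceding 7 days, so its time-based mean is just its own value, whereas the row-based window still averages it with the previous row.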

Optimizing Performance with Pandas GroupBy Mode

When working with large datasets, optimizing the performance of pandas groupby mode operations becomes crucial. Here are some tips to improve the efficiency of your groupby operations:

Use Categorical Data Types

Converting string columns to categorical data types can significantly improve groupby performance:

import pandas as pd

import numpy as np

# Create a large sample DataFrame

n = 1000000

df = pd.DataFrame({

'Group': np.random.choice(['A', 'B', 'C', 'D', 'E'], n),

'Value': np.random.randn(n),

'pandasdataframe.com': np.random.choice(['X', 'Y', 'Z'], n)

})

# Convert 'Group' to categorical

df['Group'] = df['Group'].astype('category')

# Perform groupby operation

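# observed=True restricts the result to categories that actually occur
# and avoids the FutureWarning that recent pandas versions emit for categorical keys
result = df.groupby('Group', observed=True)['Value'].mean()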

print("GroupBy result with categorical data:")

print(result)

In this example, we convert the ‘Group’ column to a categorical data type before performing the groupby operation. A categorical column stores each distinct label once and represents rows as small integer codes, which reduces memory use and can speed up grouping, especially for large datasets with a limited number of unique groups.
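One way to check the effect of the conversion on your own data is to compare memory usage and confirm that the grouped result is unchanged. The sketch below does this on a smaller random dataset; the seed and the size are arbitrary choices, and actual speedups depend on the data and the pandas version.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 100_000
df = pd.DataFrame({
    'Group': rng.choice(['A', 'B', 'C', 'D', 'E'], n),
    'Value': rng.standard_normal(n),
})

as_strings = df['Group']
as_category = df['Group'].astype('category')

# deep=True includes the memory held by the string objects themselves
mem_strings = as_strings.memory_usage(deep=True)
mem_category = as_category.memory_usage(deep=True)
print(f"strings: {mem_strings:,} bytes, category: {mem_category:,} bytes")

# The grouped means are the same either way
mean_strings = df.groupby(as_strings)['Value'].mean()
mean_category = df.groupby(as_category, observed=True)['Value'].mean()
print(mean_category)
```

Timing the two groupby calls with a tool such as timeit on a realistic dataset is a reasonable next step before committing to the conversion.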

Use Numba for Custom Aggregations

For complex custom aggregations, using Numba can greatly improve performance:

import pandas as pd

import numpy as np

from numba import jit

# Create a sample DataFrame

df = pd.DataFrame({

'Group': np.random.choice(['A', 'B', 'C'], 1000000),

'Value': np.random.randn(1000000),

'pandasdataframe.com': np.random.choice(['X', 'Y', 'Z'], 1000000)

})

# Define a custom aggregation function using Numba

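@jit(nopython=True)
def custom_agg(values):

    return np.sum(np.exp(values)) / len(values)

# Perform groupby with custom aggregation.
# A nopython function cannot accept a pandas Series, so pass each group's NumPy array.

result = df.groupby('Group')['Value'].agg(lambda s: custom_agg(s.to_numpy()))

print("GroupBy result with custom Numba aggregation:")

print(result)

In this example, we define a custom aggregation function using Numba’s @jit decorator and call it on each group’s underlying NumPy array, because Numba’s nopython mode does not understand pandas objects. Compiling the function can speed up numerically heavy calculations on large groups; the gain may be small when the function is simple or the groups are tiny.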
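Before reaching for a compiler, it is worth checking whether a custom aggregation can be rewritten in terms of built-in operations. The aggregation above is the mean of exp(Value), so a sketch of a vectorised alternative is to exponentiate the whole column once and then use the built-in mean(). The seed and the dataset size below are arbitrary.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
df = pd.DataFrame({
    'Group': rng.choice(['A', 'B', 'C'], 10_000),
    'Value': rng.standard_normal(10_000),
})

# Per-group Python callable: the lambda runs once for every group
per_group = df.groupby('Group')['Value'].agg(lambda s: np.exp(s).sum() / len(s))

# Vectorised: exponentiate the column once, then use the built-in group mean
vectorised = np.exp(df['Value']).groupby(df['Group']).mean()

print(per_group)
print(vectorised)
```

Both give the same numbers; the vectorised form relies only on pandas and NumPy and avoids the extra dependency.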

Handling Missing Data with Pandas GroupBy Mode

Dealing with missing data is a common challenge in data analysis. Pandas groupby mode provides several methods to handle missing values effectively:

import pandas as pd

import numpy as np

# Create a sample DataFrame with missing values

df = pd.DataFrame({

'Group': ['A', 'B', 'A', 'B', 'A', 'B'],

'Value': [10, np.nan, 15, 25, np.nan, 35],

'pandasdataframe.com': ['X', 'Y', 'Z', 'X', 'Y', 'Z']

})

# Fill missing values with group mean

filled_mean = df.groupby('Group')['Value'].transform(lambda x: x.fillna(x.mean()))

df['Value_filled_mean'] = filled_mean

# Fill missing values with group median

filled_median = df.groupby('Group')['Value'].transform(lambda x: x.fillna(x.median()))

df['Value_filled_median'] = filled_median

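# Fill missing values with a constant value per group
# (the constants below are illustrative placeholders, not values taken from the data)

group_defaults = {'A': 0, 'B': 100}

filled_constant = df.groupby('Group')['Value'].transform(lambda x: x.fillna(group_defaults[x.name]))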

df['Value_filled_constant'] = filled_constant

print("DataFrame with filled missing values:")

print(df)

In this example, we demonstrate three different methods to handle missing values using pandas groupby mode:

1. Filling missing values with the group mean

2. Filling missing values with the group median

3. Filling missing values with a constant value specific to each group

These techniques showcase how pandas groupby mode can be leveraged to handle missing data in a group-specific manner.
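Two further group-aware operations that often come up with missing data are counting the gaps per group and carrying the last valid observation forward within each group. The sketch below shows both on the same sample values; the column name `Value_ffill` is an illustrative choice.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'Group': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Value': [10, np.nan, 15, 25, np.nan, 35],
})

# Count missing values per group
missing_per_group = df['Value'].isna().groupby(df['Group']).sum()
print(missing_per_group)

# Forward-fill within each group; values never leak from one group into another
df['Value_ffill'] = df.groupby('Group')['Value'].ffill()
print(df)
```

The first row of group B stays missing because there is no earlier value in that group to carry forward.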

Combining Pandas GroupBy Mode with Other Pandas Features

Pandas groupby mode can be combined with other powerful pandas features to perform even more sophisticated analyses. Let’s explore some of these combinations:

GroupBy with Pivot Tables

Combining groupby operations with pivot tables allows for easy reshaping and summarization of data:

import pandas as pd

import numpy as np

# Create a sample DataFrame

df = pd.DataFrame({

'Date': pd.date_range('2023-01-01', periods=100, freq='D'),

'Category': np.random.choice(['A', 'B', 'C'], 100),

'Product': np.random.choice(['X', 'Y', 'Z'], 100),

'Sales': np.random.randint(100, 1000, 100),

'pandasdataframe.com': np.random.choice(['P', 'Q', 'R'], 100)

})

# Create a pivot table with groupby

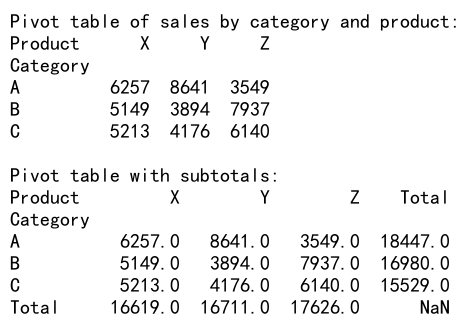

pivot_table = df.pivot_table(values='Sales',

index='Category',

columns='Product',

aggfunc='sum')

print("Pivot table of sales by category and product:")

print(pivot_table)

# Add subtotals using groupby

pivot_table['Total'] = df.groupby('Category')['Sales'].sum()

pivot_table.loc['Total'] = df.groupby('Product')['Sales'].sum()

print("\nPivot table with subtotals:")

print(pivot_table)

Output:

In this example, we create a pivot table to summarize sales by category and product, then use groupby operations to add subtotals for both rows and columns.
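pivot_table() can also compute row and column totals itself through its margins parameter. The sketch below uses a small fixed dataset (not the random data from the example) so the totals can be checked by hand.

```python
import pandas as pd

df = pd.DataFrame({
    'Category': ['A', 'A', 'B', 'B', 'B'],
    'Product': ['X', 'Y', 'X', 'Y', 'Y'],
    'Sales': [100, 200, 300, 400, 500],
})

# margins=True appends a totals row and column; margins_name sets their label
with_totals = df.pivot_table(
    values='Sales',
    index='Category',
    columns='Product',
    aggfunc='sum',
    margins=True,
    margins_name='Total',
)

print(with_totals)
```

The bottom-right cell holds the grand total (1500 here), which the manual groupby approach has to fill in separately.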

GroupBy with Merging and Joining

Pandas groupby mode can be used in conjunction with merging and joining operations to combine data from multiple sources:

import pandas as pd

# Create two sample DataFrames

df1 = pd.DataFrame({

'Group': ['A', 'B', 'C', 'A', 'B', 'C'],

'Value1': [10, 20, 30, 40, 50, 60],

'pandasdataframe.com1': ['X', 'Y', 'Z', 'X', 'Y', 'Z']

})

df2 = pd.DataFrame({

'Group': ['A', 'B', 'C', 'D'],

'Value2': [100, 200, 300, 400],

'pandasdataframe.com2': ['P', 'Q', 'R', 'S']

})

# Perform groupby operation on df1

df1_grouped = df1.groupby('Group')['Value1'].sum().reset_index()

# Merge the grouped result with df2

merged = pd.merge(df1_grouped, df2, on='Group', how='outer')

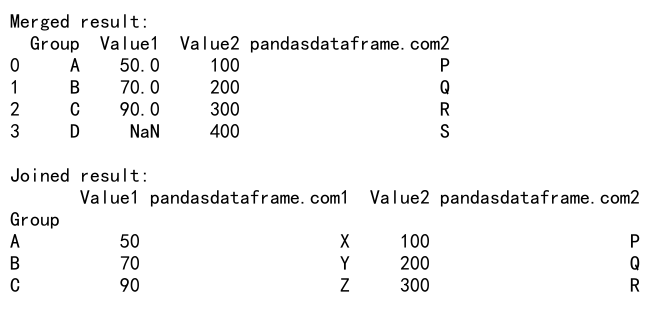

print("Merged result:")

print(merged)

# Perform a join operation with groupby

joined = df1.groupby('Group').agg({

'Value1': 'sum',

'pandasdataframe.com1': 'first'

}).join(df2.set_index('Group'), rsuffix='_df2')

print("\nJoined result:")

print(joined)

Output:

This example demonstrates how to combine groupby operations with merging and joining to integrate data from multiple sources while performing aggregations.
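With an outer merge, some groups may exist in only one of the two tables (group D in the example above). One way to spot them is the indicator parameter of merge(), as in this sketch on reduced copies of the two DataFrames.

```python
import pandas as pd

df1 = pd.DataFrame({
    'Group': ['A', 'B', 'C', 'A', 'B', 'C'],
    'Value1': [10, 20, 30, 40, 50, 60],
})
df2 = pd.DataFrame({
    'Group': ['A', 'B', 'C', 'D'],
    'Value2': [100, 200, 300, 400],
})

# Aggregate first, then merge; indicator=True adds a '_merge' column
totals = df1.groupby('Group', as_index=False)['Value1'].sum()
merged = totals.merge(df2, on='Group', how='outer', indicator=True)
print(merged)

# Groups that did not match on both sides
unmatched = merged.loc[merged['_merge'] != 'both', 'Group'].tolist()
print(unmatched)
```

Checking the indicator column before dropping or filling the resulting missing values helps avoid silently losing groups.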

Best Practices for Using Pandas GroupBy Mode

To make the most of pandas groupby mode, consider the following best practices:

- Choose appropriate aggregation functions: Select aggregation functions that make sense for your data and analysis goals. Pandas provides many built-in functions, but you can also create custom aggregations when needed.

- Use method chaining: Combine multiple operations using method chaining to write more concise and readable code.

- Leverage multi-level grouping: When dealing with complex data structures, use multi-level grouping to perform more detailed analyses.

- Optimize performance: For large datasets, consider using categorical data types, Numba-accelerated functions, or other optimization techniques to improve performance.

- Handle missing data appropriately: Use groupby operations to handle missing data in a way that makes sense for your specific use case.

- Combine with other pandas features: Integrate groupby operations with other pandas features like pivot tables, merging, and joining for more comprehensive analyses.

- Use meaningful column names: When creating new columns as a result of groupby operations, use descriptive names to enhance code readability.

- Document your code: Add comments and docstrings to explain complex groupby operations, especially when using custom aggregation functions.
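As a small illustration of the method-chaining and meaningful-column-name practices listed above, the sketch below groups, aggregates, and sorts in a single expression. The dataset and the output column names are illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    'Store': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Sales': [100, 200, 150, 300, 250, 350],
})

# Group, aggregate with descriptive names, and sort in one chained expression
summary = (
    df.groupby('Store', as_index=False)
      .agg(total_sales=('Sales', 'sum'), mean_sales=('Sales', 'mean'))
      .sort_values('total_sales', ascending=False)
      .reset_index(drop=True)
)

print(summary)
```

Wrapping the chain in parentheses lets each step sit on its own line, which keeps longer pipelines readable.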

Conclusion

Pandas groupby mode is a powerful tool for data analysis that allows you to perform complex operations on grouped data efficiently. By mastering the various aspects of pandas groupby mode, including aggregation, transformation, filtering, and advanced techniques, you can significantly enhance your data manipulation and analysis capabilities.
