Mastering Pandas GroupBy and Join Operations

Pandas groupby and join operations are essential tools for data analysis and manipulation in Python. This guide explains how groupby, join and merge work in pandas, with detailed explanations and practical examples to help you apply these techniques with confidence.

Introduction to Pandas GroupBy and Join

Groupby and join operations are fundamental to most data analysis tasks. The groupby method splits your data into groups based on one or more keys so that you can aggregate or transform each group, while join and merge operations combine multiple datasets on common columns or indices. Together, these operations provide a powerful toolkit for data manipulation and analysis.

Let’s start with a simple example to illustrate the basic concept of groupby:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],

'Age': [25, 30, 25, 30, 35],

'City': ['New York', 'London', 'New York', 'Paris', 'Tokyo'],

'Score': [80, 85, 90, 88, 92]

})

# Group by 'Name' and calculate the mean score

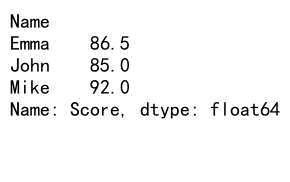

grouped = df.groupby('Name')['Score'].mean()

print(grouped)

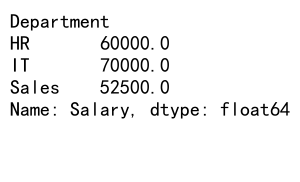

Output:

In this example, we group the DataFrame by the ‘Name’ column and calculate the mean score for each person. The groupby operation splits the data into groups based on unique names, and then we apply the mean function to the ‘Score’ column for each group.
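To see the split step on its own, you can iterate over a GroupBy object: each iteration yields a group key and the sub-DataFrame for that key. The sketch below reuses a trimmed copy of the sample data, rebuilds the mean scores by hand, and compares them with groupby().mean().

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],
    'Score': [80, 85, 90, 88, 92]
})

# Split: iterate over (key, sub-DataFrame) pairs; apply: compute each mean
manual_means = {}
for name, group in df.groupby('Name'):
    manual_means[name] = group['Score'].mean()

# Combine: collect the per-group results into one Series
manual = pd.Series(manual_means, name='Score')

grouped = df.groupby('Name')['Score'].mean()
print(manual.sort_index().equals(grouped))  # True
```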

Now, let’s look at a basic join operation:

import pandas as pd

# Create two sample DataFrames

df1 = pd.DataFrame({

'ID': [1, 2, 3, 4],

'Name': ['John', 'Emma', 'Mike', 'Sarah'],

'City': ['New York', 'London', 'Tokyo', 'Paris']

})

df2 = pd.DataFrame({

'ID': [2, 3, 4, 5],

'Age': [30, 35, 28, 32],

'Salary': [50000, 60000, 55000, 58000]

})

# Perform an inner join on the 'ID' column

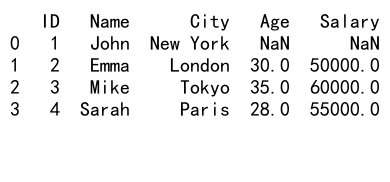

joined_df = df1.join(df2.set_index('ID'), on='ID')

print(joined_df)

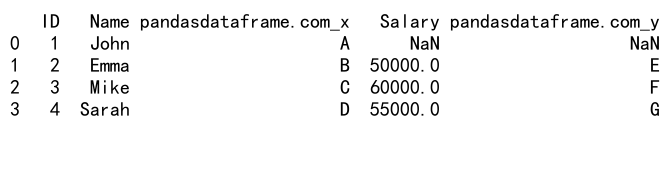

Output:

In this example, we join two DataFrames based on the ‘ID’ column. The join operation combines the data from both DataFrames where the ‘ID’ values match.
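The same inner join can be written with DataFrame.merge, which matches a column against a column instead of a column against an index. The sketch below checks that both spellings produce the same rows for this sample data; it is a comparison for this case, not a claim that the two methods are interchangeable in general.

```python
import pandas as pd

df1 = pd.DataFrame({
    'ID': [1, 2, 3, 4],
    'Name': ['John', 'Emma', 'Mike', 'Sarah'],
    'City': ['New York', 'London', 'Tokyo', 'Paris']
})
df2 = pd.DataFrame({
    'ID': [2, 3, 4, 5],
    'Age': [30, 35, 28, 32],
    'Salary': [50000, 60000, 55000, 58000]
})

# join: df1's 'ID' column is matched against df2's index
via_join = df1.join(df2.set_index('ID'), on='ID', how='inner')

# merge: both sides are matched on their 'ID' columns
via_merge = df1.merge(df2, on='ID', how='inner')

# Compare after resetting the row labels, which the two methods assign differently
print(via_join.reset_index(drop=True).equals(via_merge))
```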

Advanced GroupBy Operations

Pandas groupby operations offer a wide range of possibilities for data analysis. Let’s explore some more advanced techniques:

Multiple Column GroupBy

You can group data by multiple columns to create more specific groups:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],

'Department': ['Sales', 'HR', 'Sales', 'HR', 'IT'],

'Salary': [50000, 60000, 55000, 62000, 70000],

'pandasdataframe.com': ['A', 'B', 'C', 'D', 'E']

})

# Group by 'Name' and 'Department', then calculate mean salary

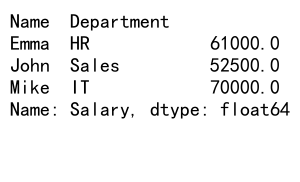

grouped = df.groupby(['Name', 'Department'])['Salary'].mean()

print(grouped)

Output:

This example groups the data by both ‘Name’ and ‘Department’, providing a more granular view of the average salaries.
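Grouping by two columns returns a Series with a two-level MultiIndex. The sketch below shows two common follow-ups on the same sample data: unstack() pivots the inner level into columns, and reset_index() turns both levels back into ordinary columns.

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],
    'Department': ['Sales', 'HR', 'Sales', 'HR', 'IT'],
    'Salary': [50000, 60000, 55000, 62000, 70000]
})

grouped = df.groupby(['Name', 'Department'])['Salary'].mean()
print(grouped.index.nlevels)  # 2: Name and Department

# Pivot the 'Department' level into columns; missing combinations become NaN
wide = grouped.unstack('Department')
print(wide)

# Or turn both index levels back into regular columns
flat = grouped.reset_index()
print(flat)
```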

Applying Multiple Aggregation Functions

You can apply multiple aggregation functions to different columns in a single groupby operation:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],

'Department': ['Sales', 'HR', 'Sales', 'HR', 'IT'],

'Salary': [50000, 60000, 55000, 62000, 70000],

'Age': [30, 35, 31, 36, 40],

'pandasdataframe.com': ['A', 'B', 'C', 'D', 'E']

})

# Group by 'Department' and apply multiple aggregation functions

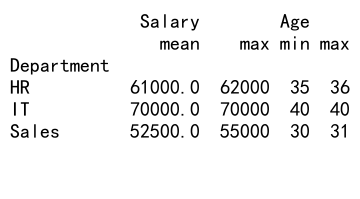

grouped = df.groupby('Department').agg({

'Salary': ['mean', 'max'],

'Age': ['min', 'max']

})

print(grouped)

Output:

This example groups the data by ‘Department’ and calculates the mean and maximum salary, as well as the minimum and maximum age for each department.
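The dictionary form above produces two-level column labels such as ('Salary', 'mean'). If you prefer flat, self-describing column names, pandas also supports named aggregation, where each keyword argument maps an output column name to an (input column, function) pair. A sketch with the same data; the output names are chosen for illustration:

```python
import pandas as pd

df = pd.DataFrame({
    'Department': ['Sales', 'HR', 'Sales', 'HR', 'IT'],
    'Salary': [50000, 60000, 55000, 62000, 70000],
    'Age': [30, 35, 31, 36, 40]
})

# Each keyword becomes an output column: name=(input column, aggregation)
summary = df.groupby('Department').agg(
    mean_salary=('Salary', 'mean'),
    max_salary=('Salary', 'max'),
    min_age=('Age', 'min'),
    max_age=('Age', 'max'),
)
print(summary)
```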

Custom Aggregation Functions

You can define and use custom aggregation functions in groupby operations:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],

'Score': [80, 85, 90, 88, 92],

'pandasdataframe.com': ['A', 'B', 'C', 'D', 'E']

})

# Define a custom aggregation function

def score_range(x):

return x.max() - x.min()

# Group by 'Name' and apply the custom function

grouped = df.groupby('Name')['Score'].agg(['mean', score_range])

print(grouped)

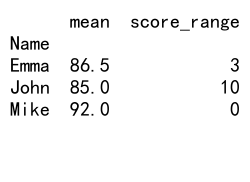

Output:

In this example, we define a custom function score_range that calculates the range of scores. We then apply this function along with the built-in mean function to our grouped data.
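A custom aggregation does not have to be a named function. The sketch below passes a lambda through named aggregation, which also lets you choose the output column name (score_range here is just an illustrative label). With the sample scores, John’s range is 90 - 80 = 10, Emma’s is 88 - 85 = 3, and Mike, with a single score, has a range of 0.

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', 'Emma', 'John', 'Emma', 'Mike'],
    'Score': [80, 85, 90, 88, 92]
})

# The lambda receives each group's 'Score' values as a Series
stats = df.groupby('Name').agg(
    mean_score=('Score', 'mean'),
    score_range=('Score', lambda s: s.max() - s.min()),
)
print(stats)
```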

Advanced Join Operations

Join operations in pandas are versatile and allow for various types of data combination. Let’s explore some advanced join techniques:

Left Join

A left join returns all rows from the left DataFrame and matching rows from the right DataFrame:

```python
import pandas as pd

# Create two sample DataFrames
df1 = pd.DataFrame({
    'ID': [1, 2, 3, 4],
    'Name': ['John', 'Emma', 'Mike', 'Sarah']
})
df2 = pd.DataFrame({
    'ID': [2, 3, 4, 5],
    'Salary': [50000, 60000, 55000, 58000]
})

# Perform a left join: keep every row of df1
left_joined = df1.merge(df2, on='ID', how='left')
print(left_joined)
```

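This example performs a left join on the ‘ID’ column, keeping all rows from df1 and only the matching rows from df2. John (ID 1) has no match in df2, so his Salary is NaN, while ID 5, which exists only in df2, is dropped.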
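To check which rows actually found a partner, merge accepts indicator=True, which adds a _merge column whose value is 'left_only', 'right_only' or 'both'. A sketch on the same IDs:

```python
import pandas as pd

df1 = pd.DataFrame({'ID': [1, 2, 3, 4], 'Name': ['John', 'Emma', 'Mike', 'Sarah']})
df2 = pd.DataFrame({'ID': [2, 3, 4, 5], 'Salary': [50000, 60000, 55000, 58000]})

left_joined = df1.merge(df2, on='ID', how='left', indicator=True)

# Rows from df1 that found no matching ID in df2
unmatched = left_joined[left_joined['_merge'] == 'left_only']
print(unmatched[['ID', 'Name']])
```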

Outer Join

An outer join returns all rows from both DataFrames, matching them wherever the keys agree:

import pandas as pd

# Create two sample DataFrames

df1 = pd.DataFrame({

'ID': [1, 2, 3, 4],

'Name': ['John', 'Emma', 'Mike', 'Sarah'],

'pandasdataframe.com': ['A', 'B', 'C', 'D']

})

df2 = pd.DataFrame({

'ID': [2, 3, 4, 5],

'Salary': [50000, 60000, 55000, 58000],

'pandasdataframe.com': ['E', 'F', 'G', 'H']

})

# Perform an outer join

outer_joined = df1.merge(df2, on='ID', how='outer')

print(outer_joined)

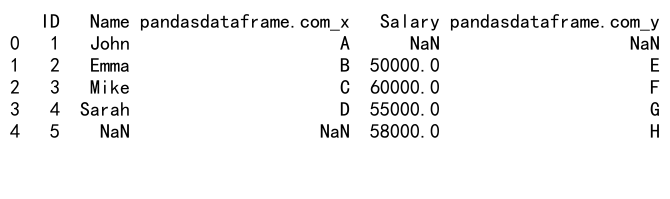

Output:

This example performs an outer join on the ‘ID’ column, keeping all rows from both DataFrames and filling in missing values with NaN.
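One side effect worth knowing: NumPy integer columns cannot hold NaN, so an outer (or left) join that introduces missing values turns an integer column such as Salary into float64. The sketch below shows this and, as one option, converts the column to pandas’ nullable Int64 dtype, which represents missing values as <NA>.

```python
import pandas as pd

df1 = pd.DataFrame({'ID': [1, 2, 3, 4], 'Name': ['John', 'Emma', 'Mike', 'Sarah']})
df2 = pd.DataFrame({'ID': [2, 3, 4, 5], 'Salary': [50000, 60000, 55000, 58000]})

outer_joined = df1.merge(df2, on='ID', how='outer')
print(outer_joined['Salary'].dtype)  # float64, because ID 1 has no salary

# Nullable integer dtype: whole numbers alongside missing values
outer_joined['Salary'] = outer_joined['Salary'].astype('Int64')
print(outer_joined)
```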

Joining on Multiple Columns

You can join DataFrames based on multiple columns:

import pandas as pd

# Create two sample DataFrames

df1 = pd.DataFrame({

'ID': [1, 2, 3, 4],

'Name': ['John', 'Emma', 'Mike', 'Sarah'],

'Department': ['Sales', 'HR', 'IT', 'Sales'],

'pandasdataframe.com': ['A', 'B', 'C', 'D']

})

df2 = pd.DataFrame({

'ID': [2, 3, 4, 5],

'Department': ['HR', 'IT', 'Sales', 'Marketing'],

'Salary': [50000, 60000, 55000, 58000],

'pandasdataframe.com': ['E', 'F', 'G', 'H']

})

# Perform a join on multiple columns

multi_joined = df1.merge(df2, on=['ID', 'Department'], how='inner')

print(multi_joined)

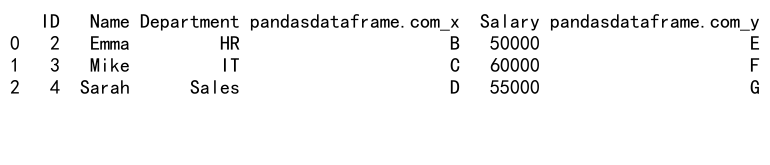

Output:

This example joins the two DataFrames on both the ‘ID’ and ‘Department’ columns, so a row is kept only when both values match (here, IDs 2, 3 and 4).
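When the key columns have different names in the two DataFrames, merge’s left_on and right_on parameters name them separately, pairing the lists position by position; both sets of key columns are kept in the result. A sketch in which the column names emp_id, dept and employee_id are hypothetical:

```python
import pandas as pd

# Hypothetical tables whose key columns are named differently
employees = pd.DataFrame({
    'emp_id': [1, 2, 3],
    'dept': ['Sales', 'HR', 'IT']
})
salaries = pd.DataFrame({
    'employee_id': [2, 3, 4],
    'Department': ['HR', 'IT', 'Sales'],
    'Salary': [50000, 60000, 55000]
})

# emp_id pairs with employee_id, dept pairs with Department
matched = employees.merge(
    salaries,
    left_on=['emp_id', 'dept'],
    right_on=['employee_id', 'Department'],
    how='inner'
)
print(matched)
```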

Combining GroupBy and Join Operations

The real power of pandas comes from combining groupby and join operations to perform complex data analysis tasks. Let’s explore some examples:

Grouping After Joining

You can perform a join operation and then group the resulting DataFrame:

```python
import pandas as pd

# Create sample DataFrames
df1 = pd.DataFrame({
    'ID': [1, 2, 3, 4],
    'Name': ['John', 'Emma', 'Mike', 'Sarah'],
    'Department': ['Sales', 'HR', 'IT', 'Sales']
})
df2 = pd.DataFrame({
    'ID': [1, 2, 3, 4],
    'Salary': [50000, 60000, 70000, 55000]
})

# Join the DataFrames
joined_df = df1.merge(df2, on='ID')

# Group the joined DataFrame by Department and calculate the mean salary
grouped = joined_df.groupby('Department')['Salary'].mean()
print(grouped)
```

In this example, we first join df1 and df2 based on the ‘ID’ column, then group the resulting DataFrame by ‘Department’ to calculate the mean salary for each department.
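When you group after a join, the join’s cardinality matters: if the right-hand table unexpectedly contains duplicate keys, each matching left row is repeated, and sums or counts computed afterwards are inflated. merge’s validate parameter checks the relationship before you aggregate. A sketch with a deliberately duplicated ID in a hypothetical salary table:

```python
import pandas as pd

df1 = pd.DataFrame({
    'ID': [1, 2, 3, 4],
    'Department': ['Sales', 'HR', 'IT', 'Sales']
})
# Hypothetical salary table in which ID 1 appears twice by mistake
df2 = pd.DataFrame({
    'ID': [1, 1, 2, 3, 4],
    'Salary': [50000, 50000, 60000, 70000, 55000]
})

# Without validation, the duplicate silently repeats ID 1's row
unchecked = df1.merge(df2, on='ID')
print(len(unchecked))  # 5 rows instead of 4

# 'one_to_one' requires the key to be unique on both sides
caught = False
try:
    df1.merge(df2, on='ID', validate='one_to_one')
except pd.errors.MergeError as e:
    caught = True
    print(f"MergeError: {e}")
```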

Joining Grouped Data

You can also group data first and then join the results:

import pandas as pd

# Create sample DataFrames

df1 = pd.DataFrame({

'ID': [1, 2, 3, 4, 1, 2],

'Name': ['John', 'Emma', 'Mike', 'Sarah', 'John', 'Emma'],

'Score': [80, 85, 90, 88, 82, 87],

'pandasdataframe.com': ['A', 'B', 'C', 'D', 'E', 'F']

})

df2 = pd.DataFrame({

'ID': [1, 2, 3, 4],

'Department': ['Sales', 'HR', 'IT', 'Sales'],

'pandasdataframe.com': ['G', 'H', 'I', 'J']

})

# Group df1 by ID and calculate mean score

grouped_df1 = df1.groupby('ID')['Score'].mean().reset_index()

# Join the grouped data with df2

result = grouped_df1.merge(df2, on='ID')

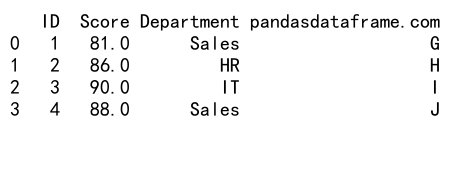

print(result)

Output:

In this example, we first group df1 by ‘ID’ and calculate the mean score. We then join this grouped data with df2 to get the department information for each ID.
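If the goal is only to attach a group statistic to every original row, groupby(...).transform() can replace the group-then-merge round trip: it returns a result with one value per input row, aligned on the original index. A sketch using df1’s scores:

```python
import pandas as pd

df1 = pd.DataFrame({
    'ID': [1, 2, 3, 4, 1, 2],
    'Score': [80, 85, 90, 88, 82, 87]
})

# transform('mean') broadcasts each group's mean back to that group's rows
df1['Mean_Score'] = df1.groupby('ID')['Score'].transform('mean')
print(df1)
```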

Advanced Techniques and Best Practices

As you become more proficient with pandas groupby join operations, it’s important to consider some advanced techniques and best practices to optimize your data analysis workflow.

Handling Missing Data in GroupBy Operations

When dealing with missing data in groupby operations, you have several options:

import pandas as pd

import numpy as np

# Create a sample DataFrame with missing data

df = pd.DataFrame({

'Name': ['John', 'Emma', 'Mike', 'Sarah', 'John'],

'Score': [80, np.nan, 90, 88, 85],

'pandasdataframe.com': ['A', 'B', 'C', 'D', 'E']

})

# Group by 'Name' and calculate mean score, ignoring NaN values

grouped_mean = df.groupby('Name')['Score'].mean()

# Group by 'Name' and calculate mean score, filling NaN with 0

grouped_fillna = df.groupby('Name')['Score'].apply(lambda x: x.fillna(0).mean())

print("Ignoring NaN:")

print(grouped_mean)

print("\nFilling NaN with 0:")

print(grouped_fillna)

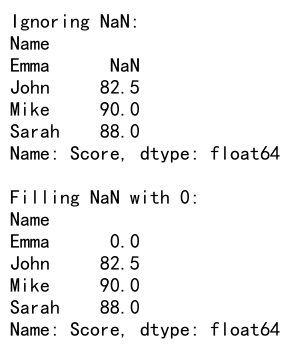

Output:

This example demonstrates two approaches to handling missing data: ignoring NaN values and filling them with a specific value before aggregation. Emma’s only score is missing, so her mean is NaN in the first result and 0 in the second.
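Missing values in the grouping key are a separate issue: by default, groupby drops rows whose key is NaN. Passing dropna=False (available since pandas 1.1) keeps them as their own group. The sketch below, on made-up data, also contrasts count(), which skips NaN values, with size(), which counts rows.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', np.nan, 'Mike', 'John'],
    'Score': [80, 90, np.nan, 85]
})

# Default: the row with a missing Name is excluded from the groups
default_sums = df.groupby('Name')['Score'].sum()
print(default_sums)

# dropna=False keeps NaN as a group key
with_nan = df.groupby('Name', dropna=False)['Score'].sum()
print(with_nan)

# count() ignores NaN scores; size() counts every row in the group
counts = df.groupby('Name')['Score'].agg(['count', 'size'])
print(counts)
```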

Efficient Memory Usage in Large Datasets

When working with large datasets, memory usage can become a concern. Here’s an example of how to use chunking to process large files efficiently:

```python
import pandas as pd

# Aggregate a single chunk ('Category' and 'Value' are placeholder column names)
def process_chunk(chunk):
    return chunk.groupby('Category')['Value'].sum()

# Read and process a large CSV file in chunks
chunk_size = 1_000_000  # Adjust based on your available memory
result = pd.Series(dtype='float64')  # running totals, indexed by Category

for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):
    chunk_result = process_chunk(chunk)
    # Align on Category; a category missing from either side counts as 0
    result = result.add(chunk_result, fill_value=0)

print(result)
```

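This example reads a large CSV file in chunks, aggregates each chunk, and accumulates the partial sums, so only one chunk needs to be in memory at a time. The file name large_file.csv and the Category and Value columns are placeholders for your own data. The pattern works directly for additive aggregations such as sum and count; a mean has to be rebuilt from per-chunk sums and counts.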
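Because the example above needs a real file, here is a self-contained sketch of the same pattern. It feeds a small, made-up in-memory CSV (via io.StringIO) to read_csv in chunks of two rows and checks that the accumulated totals match a single groupby over the whole data.

```python
import io

import pandas as pd

# A small, made-up CSV standing in for a large file on disk
csv_text = """Category,Value
A,10
B,5
A,7
C,3
B,1
"""

totals = pd.Series(dtype='float64')
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    # Sum within the chunk, then add to the running totals (aligned on Category)
    totals = totals.add(chunk.groupby('Category')['Value'].sum(), fill_value=0)

full = pd.read_csv(io.StringIO(csv_text)).groupby('Category')['Value'].sum()
print(totals)
print(totals.to_dict() == full.astype('float64').to_dict())
```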

Optimizing Join Performance

For large datasets, optimizing join performance is crucial. Here are some tips:

- Use appropriate join types (inner, left, right, outer) based on your needs.

- Ensure that the columns you’re joining on are of the same data type.

- Consider setting the join keys as the index and joining on it, which can be faster for large or repeated joins:

```python
import pandas as pd

# Create sample DataFrames with partially overlapping IDs
df1 = pd.DataFrame({
    'ID': range(1000000),
    'Value1': range(1000000)
})
df2 = pd.DataFrame({
    'ID': range(500000, 1500000),
    'Value2': range(1000000)
})

# Set 'ID' as the index of both DataFrames
df1 = df1.set_index('ID')
df2 = df2.set_index('ID')

# Join on the index; join() raises a ValueError if non-key column names
# overlap, unless lsuffix/rsuffix are given
joined_df = df1.join(df2, how='inner')
print(joined_df.head())
```

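This example joins two large DataFrames on their indexes; only the 500,000 IDs present in both (500000 to 999999) are kept. Index-based joins can be faster than column-based merges, but the gain depends on your data, so measure it on your own workload.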
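DataFrame.join on indexes is a convenience wrapper; the same result can be expressed with merge(left_index=True, right_index=True). The sketch below uses small stand-in frames so the comparison runs instantly.

```python
import pandas as pd

left = pd.DataFrame({'Value1': [10, 20, 30]}, index=pd.Index([1, 2, 3], name='ID'))
right = pd.DataFrame({'Value2': [200, 300, 400]}, index=pd.Index([2, 3, 4], name='ID'))

via_join = left.join(right, how='inner')
via_merge = left.merge(right, left_index=True, right_index=True, how='inner')

print(via_join)
print(via_join.equals(via_merge))
```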

Common Pitfalls and How to Avoid Them

When working with pandas groupby join operations, there are several common pitfalls that you should be aware of:

Duplicate Column Names After Joining

When joining DataFrames, you may end up with duplicate column names:

import pandas as pd

# Create sample DataFrames with duplicate column names

df1 = pd.DataFrame({

'ID': [1, 2, 3],

'Name': ['John', 'Emma', 'Mike'],

'Score': [80, 85, 90],

'pandasdataframe.com': ['A', 'B', 'C']

})

df2 = pd.DataFrame({

'ID': [2, 3, 4],

'Name': ['Emma', 'Mike', 'Sarah'],

'Score': [82, 88, 86],

'pandasdataframe.com': ['D', 'E', 'F']

})

# Join the DataFrames

joined_df = df1.merge(df2, on='ID', suffixes=('_left', '_right'))

print(joined_df.columns)

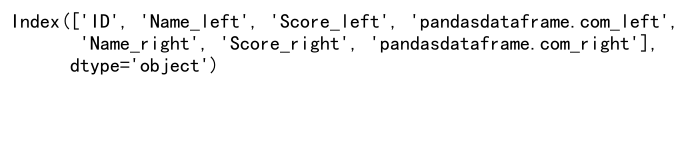

Output:

In this example, the suffixes parameter renames the overlapping Name and Score columns to Name_left/Name_right and Score_left/Score_right. Without it, merge falls back to its default suffixes, ‘_x’ and ‘_y’.
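DataFrame.join handles overlapping names differently: it has no default suffixes and raises a ValueError when non-key columns overlap, so you pass lsuffix and rsuffix explicitly. A sketch with the same Name and Score columns:

```python
import pandas as pd

df1 = pd.DataFrame({'ID': [1, 2, 3], 'Name': ['John', 'Emma', 'Mike'], 'Score': [80, 85, 90]})
df2 = pd.DataFrame({'ID': [2, 3, 4], 'Name': ['Emma', 'Mike', 'Sarah'], 'Score': [82, 88, 86]})

left = df1.set_index('ID')
right = df2.set_index('ID')

# Without suffixes, join refuses overlapping column names
raised = False
try:
    left.join(right, how='inner')
except ValueError as e:
    raised = True
    print(f"ValueError: {e}")

joined = left.join(right, how='inner', lsuffix='_left', rsuffix='_right')
print(joined.columns.tolist())
```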

Unexpected Results Due to Data Types

Joining on columns with different data types can lead to unexpected results:

```python
import pandas as pd

# Create sample DataFrames whose 'Value' columns have different data types
df1 = pd.DataFrame({
    'ID': [1, 2, 3],
    'Value': ['100', '200', '300']  # strings (object dtype)
})
df2 = pd.DataFrame({
    'ID': [1, 2, 3],
    'Value': [100, 200, 300]  # integers (int64)
})

# Attempt to join on 'Value': string and integer keys are incompatible
try:
    joined_df = df1.merge(df2, on='Value')
    print(joined_df)
except ValueError as e:
    print(f"Error: {e}")
```

This example shows what happens when you join on columns with different data types (string vs. integer). Recent pandas versions refuse this merge and raise a ValueError, and even where a merge on mismatched keys does run, '100' and 100 are different values and will never match. To fix this, make sure the columns you join on have the same data type.
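One way to apply that fix is to convert the string keys with pd.to_numeric before merging (astype(int) would also work for clean data). A sketch using the same values:

```python
import pandas as pd

df1 = pd.DataFrame({'ID': [1, 2, 3], 'Value': ['100', '200', '300']})
df2 = pd.DataFrame({'ID': [1, 2, 3], 'Value': [100, 200, 300]})

# Convert the string keys to numbers so both 'Value' columns are integers
df1['Value'] = pd.to_numeric(df1['Value'])

joined_df = df1.merge(df2, on='Value', suffixes=('_left', '_right'))
print(joined_df)
```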

Forgetting to Reset Index After GroupBy

After a groupby aggregation, the grouping key becomes the index of the result (a MultiIndex if you group by several columns), and selecting a single column such as ['Score'] returns a Series rather than a DataFrame. Forgetting this can cause errors in subsequent operations:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'Name': ['John', 'Emma', 'Mike', 'Sarah', 'John'],
    'Score': [80, 85, 90, 88, 82]
})

# Group by 'Name' and calculate the mean score (a Series indexed by Name)
grouped = df.groupby('Name')['Score'].mean()

# 'Name' is now the index, not a column, so column-style access fails
try:
    print(grouped['Name'])
except KeyError as e:
    print(f"KeyError: {e}")

# Reset the index to turn 'Name' back into a column of a DataFrame
grouped_reset = grouped.reset_index()
grouped_reset['New_Column'] = [1, 2, 3, 4]  # one value per unique name
print(grouped_reset)
```

This example demonstrates the importance of resetting the index after a groupby operation if you plan to use the grouping keys as columns or add new columns to the result.
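Alternatively, you can tell groupby not to move the keys into the index in the first place by passing as_index=False, which returns a DataFrame with ‘Name’ as an ordinary column. A sketch:

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['John', 'Emma', 'Mike', 'Sarah', 'John'],
    'Score': [80, 85, 90, 88, 82]
})

# as_index=False keeps 'Name' as a column instead of the index
grouped = df.groupby('Name', as_index=False)['Score'].mean()
grouped['New_Column'] = range(len(grouped))
print(grouped)
```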

Real-World Applications of Pandas GroupBy Join

Pandas groupby join operations have numerous real-world applications across various industries. Let’s explore some examples:

Financial Data Analysis

In financial analysis, groupby and join operations are crucial for aggregating and combining data from different sources:

import pandas as pd

# Create sample financial data

transactions = pd.DataFrame({

'Date': pd.date_range(start='2023-01-01', periods=10),

'Customer_ID': [1, 2, 1, 3, 2, 1, 3, 2, 1, 3],

'Amount': [100, 200, 150, 300, 250, 180, 220, 190, 210, 280],

'pandasdataframe.com': ['A'] * 10

})

customer_info = pd.DataFrame({

'Customer_ID': [1, 2, 3],

'Name': ['John', 'Emma', 'Mike'],

'Category': ['Regular', 'Premium', 'Regular'],

'pandasdataframe.com': ['B', 'C', 'D']

})

# Group transactions by Customer_ID and calculate total spend

customer_spend = transactions.groupby('Customer_ID')['Amount'].sum().reset_index()

# Join with customer information

result = customer_spend.merge(customer_info, on='Customer_ID')

# Calculate average spend by customer category

category_avg_spend = result.groupby('Category')['Amount'].mean()

print(category_avg_spend)

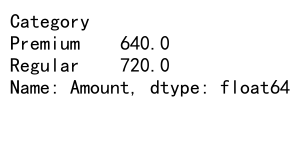

Output:

This example demonstrates how to analyze customer spending patterns by joining transaction data with customer information and then grouping by customer category.
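Transaction data is often grouped by time as well as by customer. As one option, pd.Grouper lets groupby bin a datetime column by frequency; the sketch below totals the same transactions per calendar week, where 'W' means weeks ending on Sunday (2023-01-01 is itself a Sunday, so it forms its own one-day bin).

```python
import pandas as pd

transactions = pd.DataFrame({
    'Date': pd.date_range(start='2023-01-01', periods=10),
    'Customer_ID': [1, 2, 1, 3, 2, 1, 3, 2, 1, 3],
    'Amount': [100, 200, 150, 300, 250, 180, 220, 190, 210, 280]
})

# Weekly bins ending on Sunday, labelled by the week's end date
weekly = transactions.groupby(pd.Grouper(key='Date', freq='W'))['Amount'].sum()
print(weekly)
```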

E-commerce Sales Analysis

In e-commerce, groupby and join operations can help analyze sales data across different dimensions:

import pandas as pd

# Create sample e-commerce data

sales = pd.DataFrame({

'Date': pd.date_range(start='2023-01-01', periods=10),

'Product_ID': [1, 2, 3, 1, 2, 3, 1, 2, 3, 1],

'Quantity': [5, 3, 2, 4, 6, 3, 5, 4, 2, 3],

'Revenue': [500, 450, 300, 400, 900, 450, 500, 600, 300, 300],

'pandasdataframe.com': ['A'] * 10

})

products = pd.DataFrame({

'Product_ID': [1, 2, 3],

'Name': ['Widget A', 'Widget B', 'Widget C'],

'Category': ['Electronics', 'Home', 'Electronics'],

'pandasdataframe.com': ['B', 'C', 'D']

})

# Group sales by Product_ID and calculate total quantity and revenue

product_sales = sales.groupby('Product_ID').agg({

'Quantity': 'sum',

'Revenue': 'sum'

}).reset_index()

# Join with product information

result = product_sales.merge(products, on='Product_ID')

# Calculate total revenue by category

category_revenue = result.groupby('Category')['Revenue'].sum()

print(category_revenue)

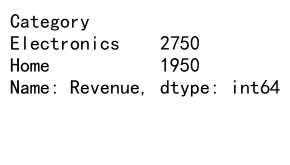

Output:

This example shows how to analyze e-commerce sales data by joining sales information with product details and then grouping by product category.
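A common next step is a derived metric such as average revenue per unit. The sketch below computes it as a ratio of summed totals per category, which weights each product by the units it sold; averaging per-product ratios instead would give every product equal weight. It reuses the sample data above, trimmed to the needed columns.

```python
import pandas as pd

sales = pd.DataFrame({
    'Product_ID': [1, 2, 3, 1, 2, 3, 1, 2, 3, 1],
    'Quantity': [5, 3, 2, 4, 6, 3, 5, 4, 2, 3],
    'Revenue': [500, 450, 300, 400, 900, 450, 500, 600, 300, 300]
})
products = pd.DataFrame({
    'Product_ID': [1, 2, 3],
    'Category': ['Electronics', 'Home', 'Electronics']
})

# Join first, then sum quantity and revenue per category
by_category = (
    sales.merge(products, on='Product_ID')
    .groupby('Category')[['Quantity', 'Revenue']]
    .sum()
)
# Ratio of sums: total revenue divided by total units in each category
by_category['Revenue_per_Unit'] = by_category['Revenue'] / by_category['Quantity']
print(by_category)
```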

Healthcare Data Analysis

In healthcare, groupby and join operations can be used to analyze patient data and treatment outcomes:

```python
import pandas as pd

# Create sample healthcare data
patients = pd.DataFrame({
    'Patient_ID': range(1, 11),
    'Age': [35, 42, 28, 55, 61, 39, 47, 52, 33, 58],
    'Gender': ['M', 'F', 'F', 'M', 'M', 'F', 'M', 'F', 'M', 'F']
})
treatments = pd.DataFrame({
    'Patient_ID': [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],
    'Treatment': ['A', 'B', 'A', 'C', 'B', 'C', 'A', 'B', 'A', 'C'],
    'Outcome': ['Improved', 'No Change', 'Improved', 'Worsened', 'Improved',
                'Improved', 'Improved', 'No Change', 'Improved', 'No Change']
})

# Join patient data with treatment outcomes (merge is an inner join by default)
patient_treatments = patients.merge(treatments, on='Patient_ID')

# Success rate per treatment: the mean of a boolean "Improved" flag
success_rate = (
    patient_treatments
    .assign(Improved=patient_treatments['Outcome'].eq('Improved'))
    .groupby('Treatment')['Improved']
    .mean()
    .reset_index(name='Success_Rate')
)
print(success_rate)
```

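This example demonstrates how to analyze treatment outcomes by joining patient data with treatment information and then calculating the success rate for each treatment. Because merge performs an inner join by default, patients 6 to 10, who have no treatment records, are excluded.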
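To see the full distribution of outcomes rather than only the share of improvements, pd.crosstab with normalize='index' turns counts into per-treatment proportions. A sketch using the treatment records above:

```python
import pandas as pd

treatments = pd.DataFrame({
    'Treatment': ['A', 'B', 'A', 'C', 'B', 'C', 'A', 'B', 'A', 'C'],
    'Outcome': ['Improved', 'No Change', 'Improved', 'Worsened', 'Improved',
                'Improved', 'Improved', 'No Change', 'Improved', 'No Change']
})

# Each row sums to 1: the share of each outcome within a treatment
outcome_shares = pd.crosstab(treatments['Treatment'], treatments['Outcome'], normalize='index')
print(outcome_shares)
```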

Advanced GroupBy Join Techniques

With these fundamentals in place, you can combine groupby and join with other pandas features to handle more complex analysis tasks.

Window Functions with GroupBy

Window functions allow you to perform calculations across a set of rows that are related to the current row. Here’s an example using a window function with groupby:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({

'Date': pd.date_range(start='2023-01-01', periods=10),

'Product': ['A', 'B', 'A', 'B', 'A', 'B', 'A', 'B', 'A', 'B'],

'Sales': [100, 150, 120, 180, 90, 200, 110, 160, 130, 170],

'pandasdataframe.com': ['X'] * 10

})

# Calculate cumulative sales for each product

df['Cumulative_Sales'] = df.groupby('Product')['Sales'].cumsum()

# Calculate moving average of sales (3-day window) for each product

df['Moving_Avg_Sales'] = df.groupby('Product')['Sales'].rolling(window=3).mean().reset_index(0, drop=True)

print(df)

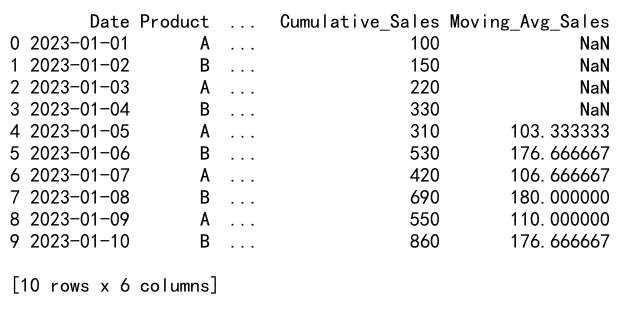

Output:

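This example demonstrates how to calculate cumulative sales and a moving average for each product using groupby with window functions. The window counts rows, not days: because the two products alternate day by day, each 3-row window for a product spans five calendar days.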
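Other per-group window-style operations follow the same pattern. For example, diff() within each product gives the change from that product’s previous sale, and the first row of every product is NaN because it has no predecessor. A sketch with the same sales figures, showing the equivalent shift() formulation as well:

```python
import pandas as pd

df = pd.DataFrame({
    'Date': pd.date_range(start='2023-01-01', periods=10),
    'Product': ['A', 'B', 'A', 'B', 'A', 'B', 'A', 'B', 'A', 'B'],
    'Sales': [100, 150, 120, 180, 90, 200, 110, 160, 130, 170]
})

# Change versus the previous row of the same product
df['Change'] = df.groupby('Product')['Sales'].diff()

# Equivalent formulation: subtract the product's previous value explicitly
df['Change_via_shift'] = df['Sales'] - df.groupby('Product')['Sales'].shift(1)
print(df)
```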

Multi-level GroupBy and Join

You can group by several keys, reshape the result, and then join it with other data:

import pandas as pd

# Create sample DataFrames

sales = pd.DataFrame({

'Date': pd.date_range(start='2023-01-01', periods=12),

'Store': ['A', 'B', 'C'] * 4,

'Product': ['X', 'Y'] * 6,

'Sales': [100, 150, 120, 180, 90, 200, 110, 160, 130, 170, 140, 190],

'pandasdataframe.com': ['Z'] * 12

})

store_info = pd.DataFrame({

'Store': ['A', 'B', 'C'],

'Location': ['New York', 'Los Angeles', 'Chicago'],

'pandasdataframe.com': ['W'] * 3

})

# Perform multi-level groupby

grouped_sales = sales.groupby(['Store', 'Product'])['Sales'].sum().reset_index()

# Reshape the data

pivoted_sales = grouped_sales.pivot(index='Store', columns='Product', values='Sales')

pivoted_sales.columns.name = None

pivoted_sales.reset_index(inplace=True)

# Join with store information

result = pivoted_sales.merge(store_info, on='Store')

print(result)

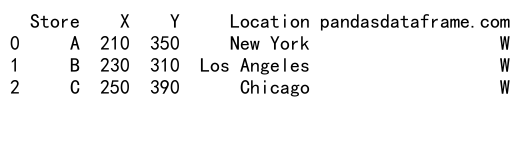

Output:

This example shows how to perform a multi-level groupby on sales data, reshape the result, and then join it with store information.
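The groupby-then-pivot steps can also be collapsed into a single pivot_table call with aggfunc='sum', which aggregates repeated Store/Product pairs itself (a plain pivot raises a ValueError when the index/column pairs contain duplicates). A sketch with the same sales figures:

```python
import pandas as pd

sales = pd.DataFrame({
    'Store': ['A', 'B', 'C'] * 4,
    'Product': ['X', 'Y'] * 6,
    'Sales': [100, 150, 120, 180, 90, 200, 110, 160, 130, 170, 140, 190]
})
store_info = pd.DataFrame({
    'Store': ['A', 'B', 'C'],
    'Location': ['New York', 'Los Angeles', 'Chicago']
})

# Aggregate and reshape in one step, then join the store details
pivoted = sales.pivot_table(index='Store', columns='Product', values='Sales', aggfunc='sum')
result = pivoted.reset_index().merge(store_info, on='Store')
print(result)
```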

Conclusion

Pandas groupby join operations are powerful tools for data analysis and manipulation. By mastering these techniques, you can efficiently process and analyze complex datasets, extract valuable insights, and make data-driven decisions. Remember to consider performance implications when working with large datasets and always validate your results to ensure accuracy.

Pandas Dataframe
