Mastering Pandas GroupBy First: A Comprehensive Guide to Efficient Data Analysis

Pandas groupby first is a powerful technique in data analysis that allows you to extract the first non-null value of each column within grouped data. This method is particularly useful when working with large datasets and you need to summarize information based on specific criteria. In this comprehensive guide, we’ll explore the ins and outs of pandas groupby first, providing detailed explanations and practical examples to help you master this essential tool in your data analysis toolkit.

Understanding Pandas GroupBy First

Pandas groupby first is a combination of two key concepts in pandas: groupby and the first aggregation function. The groupby operation splits your data into groups based on one or more columns, while first returns, for each column, the first non-null value within each group. Together they let you summarize and analyze your data efficiently in various ways.

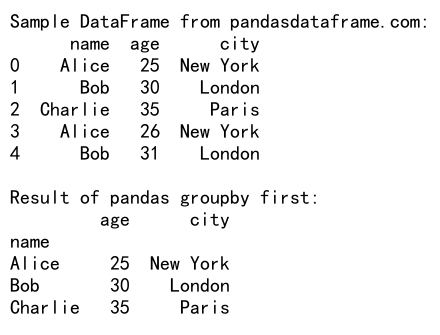

Let’s start with a simple example to illustrate how pandas groupby first works:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'name': ['Alice', 'Bob', 'Charlie', 'Alice', 'Bob'],
    'age': [25, 30, 35, 26, 31],
    'city': ['New York', 'London', 'Paris', 'New York', 'London']
})

# Apply pandas groupby first
result = df.groupby('name').first()

print("Sample DataFrame:")
print(df)
print("\nResult of pandas groupby first:")
print(result)
```


In this example, we create a simple DataFrame with names, ages, and cities. We then use pandas groupby first to group the data by name and take the first entry for each person. The result is a new DataFrame with one row per unique name: the names become the index (sorted alphabetically by default), and each column holds that person’s first non-null value.
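Because groupby sorts the group keys by default, the result above is ordered alphabetically. The sketch below uses a reordered, hypothetical version of the sample data to show that passing sort=False keeps the groups in the order in which they first appear.

```python
import pandas as pd

# Hypothetical data: 'Charlie' appears first this time
df = pd.DataFrame({
    'name': ['Charlie', 'Alice', 'Bob', 'Alice', 'Bob'],
    'age': [35, 25, 30, 26, 31],
})

# Default: group keys become a sorted index
sorted_result = df.groupby('name').first()

# sort=False: groups keep their order of first appearance
unsorted_result = df.groupby('name', sort=False).first()

print(sorted_result)
print(unsorted_result)
```

Skipping the sort can also save a little work when there are many groups and the order does not matter.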

Benefits of Using Pandas GroupBy First

Pandas groupby first offers several advantages when working with data:

- Efficient data summarization: It allows you to quickly summarize large datasets by selecting the first occurrence of values within groups.

- Flexibility: You can group data by one or multiple columns, providing versatile analysis options.

- Easy integration: It seamlessly integrates with other pandas functions and methods for comprehensive data manipulation.

- Performance: Pandas groupby first runs in compiled code rather than a Python loop, making it suitable for large datasets.

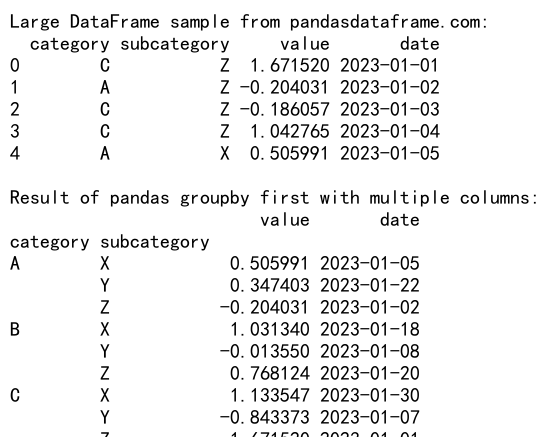

Let’s explore a more complex example to demonstrate these benefits:

```python
import pandas as pd
import numpy as np

# Create a larger sample DataFrame
np.random.seed(42)
df = pd.DataFrame({
    'category': np.random.choice(['A', 'B', 'C'], 1000),
    'subcategory': np.random.choice(['X', 'Y', 'Z'], 1000),
    'value': np.random.randn(1000),
    'date': pd.date_range(start='2023-01-01', periods=1000)
})

# Apply pandas groupby first with multiple columns
result = df.groupby(['category', 'subcategory']).first()

print("Large DataFrame sample:")
print(df.head())
print("\nResult of pandas groupby first with multiple columns:")
print(result)
```


In this example, we create a larger DataFrame with categories, subcategories, values, and dates. We then use pandas groupby first to group the data by both category and subcategory, selecting the first occurrence of each combination. This demonstrates how pandas groupby first can efficiently summarize complex datasets with multiple grouping criteria.

Key Concepts in Pandas GroupBy First

To fully understand and utilize pandas groupby first, it’s essential to grasp several key concepts:

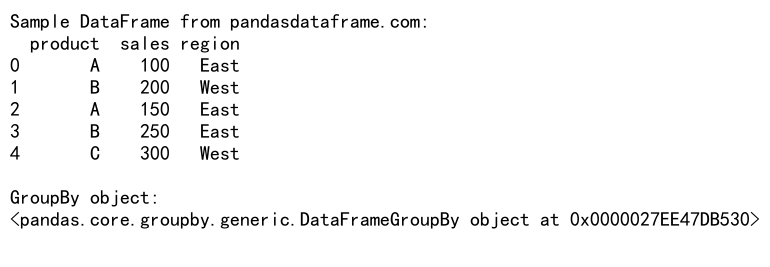

1. GroupBy Object

The groupby operation in pandas returns a GroupBy object, which is a powerful tool for data analysis. This object allows you to perform various operations on grouped data, including aggregation, transformation, and filtering.

Here’s an example of creating a GroupBy object:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'product': ['A', 'B', 'A', 'B', 'C'],
    'sales': [100, 200, 150, 250, 300],
    'region': ['East', 'West', 'East', 'East', 'West']
})

# Create a GroupBy object; no aggregation happens until a method such as first() is called
grouped = df.groupby('product')

print("Sample DataFrame:")
print(df)
print("\nGroupBy object:")
print(grouped)
```


In this example, we create a GroupBy object by grouping the DataFrame by the ‘product’ column. This object can then be used for various operations, including the first aggregation.
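A GroupBy object can also be inspected directly before aggregating. This sketch reuses the same sample data and relies on the standard ngroups attribute, the size() and get_group() methods, and iteration over (key, sub-DataFrame) pairs, then calls first() on the same object.

```python
import pandas as pd

df = pd.DataFrame({
    'product': ['A', 'B', 'A', 'B', 'C'],
    'sales': [100, 200, 150, 250, 300],
    'region': ['East', 'West', 'East', 'East', 'West'],
})
grouped = df.groupby('product')

# Number of groups and number of rows in each group
print(grouped.ngroups)
sizes = grouped.size()
print(sizes)

# Pull out one group as an ordinary DataFrame
group_a = grouped.get_group('A')
print(group_a)

# Iterate over (key, sub-DataFrame) pairs
for key, sub_df in grouped:
    print(key, len(sub_df))

# The same object can then be aggregated
first_rows = grouped.first()
print(first_rows)
```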

2. Aggregation Functions

Pandas provides numerous aggregation functions that can be applied to grouped data. The ‘first’ function is one of these aggregation functions, but there are many others, such as ‘sum’, ‘mean’, ‘max’, ‘min’, etc.

Let’s compare the ‘first’ function with other aggregation functions:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'product': ['A', 'B', 'A', 'B', 'C'],
    'sales': [100, 200, 150, 250, 300],
    'region': ['East', 'West', 'East', 'East', 'West']
})

# Apply different aggregation functions.
# first works on any column; sum and mean get numeric_only=True because
# 'region' holds strings (mean raises a TypeError on them in pandas 2.x,
# and sum would otherwise concatenate the strings)
first_result = df.groupby('product').first()
sum_result = df.groupby('product').sum(numeric_only=True)
mean_result = df.groupby('product').mean(numeric_only=True)

print("Sample DataFrame:")
print(df)
print("\nFirst aggregation result:")
print(first_result)
print("\nSum aggregation result:")
print(sum_result)
print("\nMean aggregation result:")
print(mean_result)
```

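This example demonstrates how different aggregation functions can be applied to the same grouped data, producing different results based on the specific function used. Note that first works on columns of any type, whereas mean is only defined for numeric data; in pandas 2.x, pass numeric_only=True (or select the numeric columns) when the frame also contains text.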
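The natural counterpart of first is last, and several aggregations can be requested at once by passing a list to agg. The sketch below, on the same sample data, puts first, last and count side by side for the ‘sales’ column.

```python
import pandas as pd

df = pd.DataFrame({
    'product': ['A', 'B', 'A', 'B', 'C'],
    'sales': [100, 200, 150, 250, 300],
    'region': ['East', 'West', 'East', 'East', 'West'],
})

# One output column per aggregation: first value, last value, non-null count
summary = df.groupby('product')['sales'].agg(['first', 'last', 'count'])
print(summary)
```

For single-row groups such as C, first and last return the same value.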

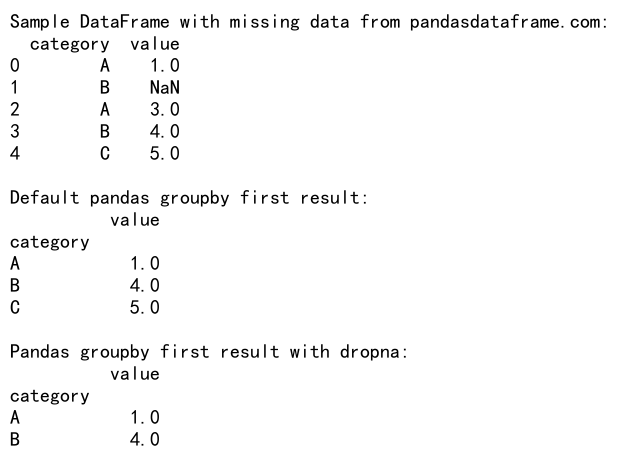

3. Handling Missing Data

When using pandas groupby first, it’s important to consider how missing data is handled. By default, first skips missing values: for each column it returns the first non-null value in the group, and it returns NaN only if every value of that column in the group is missing. As a result, the output can differ from the literal first row of the group.

Here’s an example of handling missing data with pandas groupby first:

```python
import pandas as pd
import numpy as np

# Create a sample DataFrame with missing data
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C'],
    'value': [1, np.nan, 3, 4, 5]
})

# Apply pandas groupby first with default behavior (NaN is skipped)
default_result = df.groupby('category').first()

# Apply pandas groupby first, then drop groups whose result is still NaN
dropna_result = df.groupby('category').first().dropna()

print("Sample DataFrame with missing data:")
print(df)
print("\nDefault pandas groupby first result:")
print(default_result)
print("\nPandas groupby first result with dropna:")
print(dropna_result)
```


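In this example, group B’s first row holds NaN, so first falls through to its next non-null value, 4.0. Calling dropna() on the result removes only groups whose column is missing entirely; since every group here has at least one value, both results are identical.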
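If you need the literal first row of each group, missing values included, a row-based method such as head(1) is a simple alternative. This sketch contrasts it with first on the same data; note that head(1) keeps the original row index instead of indexing by group key.

```python
import pandas as pd
import numpy as np

df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C'],
    'value': [1, np.nan, 3, 4, 5],
})

# first() skips NaN: group B falls through to its first non-null value (4.0)
skipping = df.groupby('category').first()

# head(1) returns the literal first row of each group, NaN included,
# and keeps the original row labels (0, 1, 4)
literal = df.groupby('category').head(1)

print(skipping)
print(literal)
```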

Advanced Techniques with Pandas GroupBy First

Now that we’ve covered the basics, let’s explore some advanced techniques using pandas groupby first:

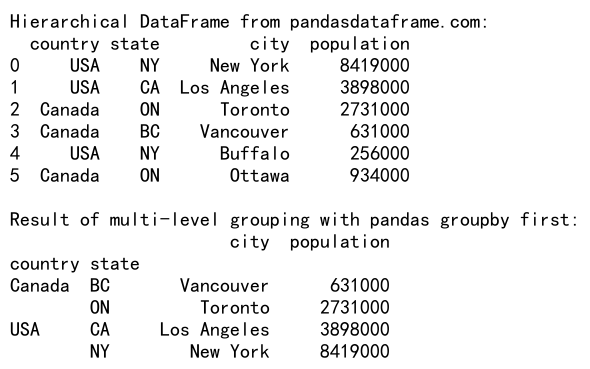

1. Multi-level Grouping

Pandas groupby first supports multi-level grouping, allowing you to group data by multiple columns simultaneously. This is particularly useful when dealing with hierarchical data structures.

Here’s an example of multi-level grouping with pandas groupby first:

```python
import pandas as pd

# Create a sample DataFrame with hierarchical data
df = pd.DataFrame({
    'country': ['USA', 'USA', 'Canada', 'Canada', 'USA', 'Canada'],
    'state': ['NY', 'CA', 'ON', 'BC', 'NY', 'ON'],
    'city': ['New York', 'Los Angeles', 'Toronto', 'Vancouver', 'Buffalo', 'Ottawa'],
    'population': [8419000, 3898000, 2731000, 631000, 256000, 934000]
})

# Apply multi-level grouping with pandas groupby first
result = df.groupby(['country', 'state']).first()

print("Hierarchical DataFrame:")
print(df)
print("\nResult of multi-level grouping with pandas groupby first:")
print(result)
```


This example demonstrates how to use pandas groupby first with multiple columns to create a hierarchical summary of the data.
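The result of a multi-column groupby has a MultiIndex. The sketch below shows a few standard ways to read it on the same data: selecting an outer level with loc, selecting one (country, state) pair, cross-sectioning the inner level with xs, and flattening with reset_index.

```python
import pandas as pd

df = pd.DataFrame({
    'country': ['USA', 'USA', 'Canada', 'Canada', 'USA', 'Canada'],
    'state': ['NY', 'CA', 'ON', 'BC', 'NY', 'ON'],
    'city': ['New York', 'Los Angeles', 'Toronto', 'Vancouver', 'Buffalo', 'Ottawa'],
    'population': [8419000, 3898000, 2731000, 631000, 256000, 934000],
})
result = df.groupby(['country', 'state']).first()

# All states of one country (outer level)
usa = result.loc['USA']

# One specific (country, state) pair and column
ontario_city = result.loc[('Canada', 'ON'), 'city']

# Cross-section on the inner 'state' level
ny_rows = result.xs('NY', level='state')

# Back to ordinary columns
flat = result.reset_index()

print(usa)
print(ontario_city)
print(ny_rows)
print(flat)
```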

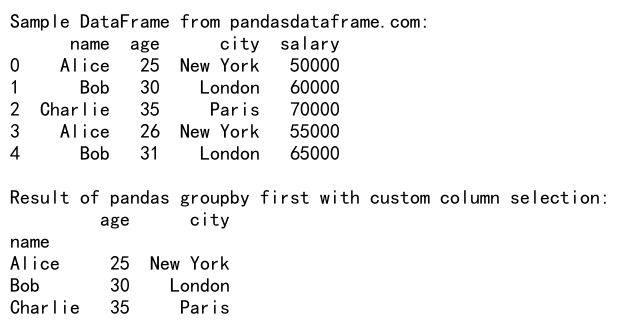

2. Customizing Column Selection

When using pandas groupby first, you can customize which columns are included in the result by specifying them explicitly.

Here’s an example of customizing column selection with pandas groupby first:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'name': ['Alice', 'Bob', 'Charlie', 'Alice', 'Bob'],
    'age': [25, 30, 35, 26, 31],
    'city': ['New York', 'London', 'Paris', 'New York', 'London'],
    'salary': [50000, 60000, 70000, 55000, 65000]
})

# Select the wanted columns on the GroupBy object, then take first;
# this avoids aggregating 'salary' only to throw it away
result = df.groupby('name')[['age', 'city']].first()

print("Sample DataFrame:")
print(df)
print("\nResult of pandas groupby first with custom column selection:")
print(result)
```


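In this example, only the ‘age’ and ‘city’ columns appear in the result. Selecting them on the GroupBy object before calling first, as in df.groupby('name')[['age', 'city']].first(), is usually preferable to slicing afterwards, because pandas then skips aggregating columns you don’t need (here, ‘salary’).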

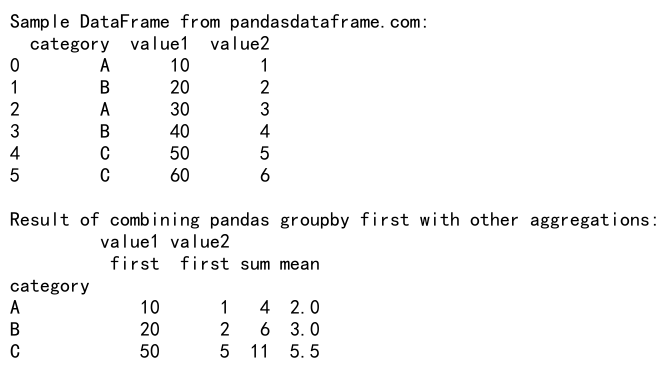

3. Combining GroupBy First with Other Aggregations

Pandas groupby first can be combined with other aggregation functions to create more complex summaries of your data.

Here’s an example of combining pandas groupby first with other aggregations:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'C'],
    'value1': [10, 20, 30, 40, 50, 60],
    'value2': [1, 2, 3, 4, 5, 6]
})

# Combine pandas groupby first with other aggregations
result = df.groupby('category').agg({
    'value1': 'first',
    'value2': ['first', 'sum', 'mean']
})

print("Sample DataFrame:")
print(df)
print("\nResult of combining pandas groupby first with other aggregations:")
print(result)
```


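This example shows how to use pandas groupby first in combination with other aggregation functions like sum and mean to create a more comprehensive summary of the data. Because ‘value2’ receives a list of functions, the result has two-level (MultiIndex) column labels such as ('value2', 'sum').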
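If you prefer flat column names, named aggregation lets you choose each output name yourself. This sketch computes the same statistics on the same data; the output names (value1_first and so on) are arbitrary choices for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'C'],
    'value1': [10, 20, 30, 40, 50, 60],
    'value2': [1, 2, 3, 4, 5, 6],
})

# Each keyword is an output column: (input column, aggregation)
result = df.groupby('category').agg(
    value1_first=('value1', 'first'),
    value2_first=('value2', 'first'),
    value2_sum=('value2', 'sum'),
    value2_mean=('value2', 'mean'),
)
print(result)
```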

Practical Applications of Pandas GroupBy First

Pandas groupby first has numerous practical applications in data analysis and manipulation. Let’s explore some common use cases:

1. Time Series Analysis

In time series analysis, pandas groupby first can be used to extract the first occurrence of events within specific time periods.

Here’s an example of using pandas groupby first for time series analysis:

```python
import pandas as pd

# Create a sample time series DataFrame
df = pd.DataFrame({
    'date': pd.date_range(start='2023-01-01', periods=10),
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C', 'A', 'B'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
})

# Extract the first occurrence of each category per month.
# The month key is renamed to 'month'; left as 'date', reset_index()
# would fail because the result still contains a 'date' column.
month = df['date'].dt.to_period('M').rename('month')
result = df.groupby([month, 'category']).first().reset_index()

print("Time series DataFrame:")
print(df)
print("\nResult of pandas groupby first for time series analysis:")
print(result)
```

This example demonstrates how to use pandas groupby first to extract the first occurrence of each category within each month of a time series dataset.
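An alternative is to let pandas bin the dates itself with pd.Grouper. The sketch below assumes weekly sample dates spanning three months (hypothetical data) and uses freq='MS', so each group is labeled by the first day of its month.

```python
import pandas as pd

# Hypothetical data: one observation every 7 days, Jan 1 to Mar 5
df = pd.DataFrame({
    'date': pd.date_range(start='2023-01-01', periods=10, freq='7D'),
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C', 'A', 'B'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80, 90, 100],
})

# Group by category and by calendar month (labels are month starts)
result = df.groupby(['category', pd.Grouper(key='date', freq='MS')]).first()
print(result)
```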

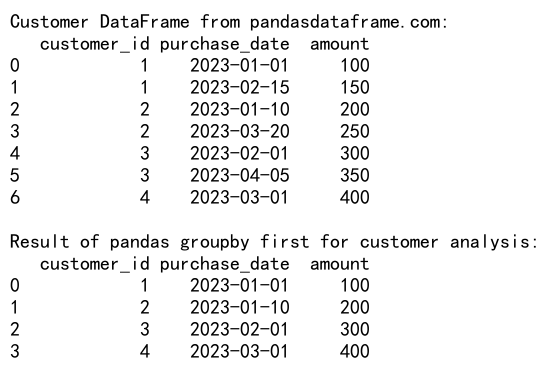

2. Customer Analysis

In customer analysis, pandas groupby first can be used to extract the first purchase or interaction of each customer.

Here’s an example of using pandas groupby first for customer analysis:

```python
import pandas as pd

# Create a sample customer DataFrame
df = pd.DataFrame({
    'customer_id': [1, 1, 2, 2, 3, 3, 4],
    'purchase_date': pd.to_datetime(['2023-01-01', '2023-02-15', '2023-01-10', '2023-03-20',
                                     '2023-02-01', '2023-04-05', '2023-03-01']),
    'amount': [100, 150, 200, 250, 300, 350, 400]
})

# Extract the first purchase of each customer.
# first() follows the current row order, so sort by date first
# to make "first" mean "earliest".
result = (
    df.sort_values('purchase_date')
      .groupby('customer_id')
      .first()
      .reset_index()
)

print("Customer DataFrame:")
print(df)
print("\nResult of pandas groupby first for customer analysis:")
print(result)
```


This example shows how to use pandas groupby first to extract the first purchase information for each customer, which can be useful for analyzing customer acquisition and initial behavior.
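When the rows are not in date order, another option is to locate each customer’s earliest row with idxmin and select those rows whole. The sketch below uses a shuffled, hypothetical version of the purchase data to show why plain first() can pick the wrong purchase.

```python
import pandas as pd

# Hypothetical data: customers 1 and 3 have their rows out of date order
df = pd.DataFrame({
    'customer_id': [1, 1, 2, 2, 3, 3, 4],
    'purchase_date': pd.to_datetime(['2023-02-15', '2023-01-01', '2023-01-10', '2023-03-20',
                                     '2023-04-05', '2023-02-01', '2023-03-01']),
    'amount': [150, 100, 200, 250, 350, 300, 400],
})

# Naive: first() just takes the first row it sees per customer
naive = df.groupby('customer_id').first()

# Row label of the earliest purchase per customer, then select those rows
earliest_idx = df.groupby('customer_id')['purchase_date'].idxmin()
first_purchases = df.loc[earliest_idx].reset_index(drop=True)

print(naive)
print(first_purchases)
```

Because whole rows are selected, the date and amount are guaranteed to come from the same purchase.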

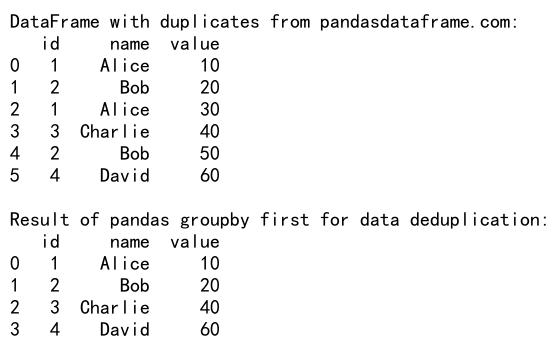

3. Data Deduplication

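Pandas groupby first can be an effective tool for data deduplication when you want to keep one row per key. Keep in mind that it takes the first non-null value of each column separately, so when duplicates contain missing values the output row can combine data from several of them.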

Here’s an example of using pandas groupby first for data deduplication:

```python
import pandas as pd

# Create a sample DataFrame with duplicate entries
df = pd.DataFrame({
    'id': [1, 2, 1, 3, 2, 4],
    'name': ['Alice', 'Bob', 'Alice', 'Charlie', 'Bob', 'David'],
    'value': [10, 20, 30, 40, 50, 60]
})

# Remove duplicates keeping the first occurrence
result = df.groupby('id').first().reset_index()

print("DataFrame with duplicates:")
print(df)
print("\nResult of pandas groupby first for data deduplication:")
print(result)
```


This example demonstrates how to use pandas groupby first to remove duplicate entries based on the ‘id’ column while keeping the first occurrence of each unique id.
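The difference between groupby first and drop_duplicates only shows up when values are missing. In the hypothetical data below, the first row for id 1 has no name: groupby first fills it from the later duplicate, while drop_duplicates keeps the original row unchanged.

```python
import pandas as pd

# Hypothetical data: the first record for id 1 is missing its name
df = pd.DataFrame({
    'id': [1, 2, 1],
    'name': [None, 'Bob', 'Alice'],
    'value': [10, 20, 30],
})

# First non-null value per column: name comes from row 2, value from row 0
via_groupby = df.groupby('id').first().reset_index()

# Whole first row per id, missing name included
via_drop = df.drop_duplicates(subset='id', keep='first')

print(via_groupby)
print(via_drop)
```

Use drop_duplicates when each output row must be a real input row; use groupby first when filling gaps from later duplicates is acceptable.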

Best Practices and Performance Considerations

When working with pandas groupby first, it’s important to keep in mind some best practices and performance considerations:

- Index optimization: If you frequently group by the same column(s), setting them as the index of your DataFrame may help, although the gain is usually modest; measure before relying on it.

- Memory management: For large datasets, consider reading the data in chunks or iterating over groups to reduce memory usage.

- Combining operations: When possible, compute several aggregations in a single groupby (for example with agg) instead of grouping the same data repeatedly.

- Using categorical data: Convert low-cardinality string columns to the categorical data type to reduce memory use and often speed up grouping on large datasets.

Here’s an example incorporating some of these best practices:

```python
import numpy as np
import pandas as pd

# Create a large sample DataFrame
# (pd.np was removed in pandas 2.0, so NumPy is imported directly)
df = pd.DataFrame({
    'category': pd.Categorical(np.random.choice(['A', 'B', 'C'], 1000000)),
    'value': np.random.randn(1000000)
})

# Set 'category' as the index (may help if you group by it repeatedly)
df.set_index('category', inplace=True)

# Perform pandas groupby first on the index level;
# observed=True keeps only categories that occur and avoids the
# FutureWarning recent pandas versions emit for categorical keys
result = df.groupby(level=0, observed=True).first()

print("Large DataFrame sample:")
print(df.head())
print("\nResult of optimized pandas groupby first:")
print(result)
```

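This example demonstrates how to prepare a large DataFrame for pandas groupby first operations by using categorical data and setting the grouping column as the index. How much each step helps depends on your data and pandas version, so it is worth timing both variants on your own workload.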
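The memory side of the categorical advice is easy to check directly. This sketch builds a hypothetical column of 100,000 short labels and compares its footprint as plain Python strings (object dtype) and as a categorical, using memory_usage(deep=True) so the string objects themselves are counted.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
labels = rng.choice(['A', 'B', 'C'], 100_000)

# Same data, two dtypes
as_object = pd.Series(labels, dtype=object)
as_category = pd.Series(labels, dtype='category')

# deep=True counts the Python string objects, not just the pointers
object_bytes = as_object.memory_usage(deep=True)
category_bytes = as_category.memory_usage(deep=True)

print(f"object:   {object_bytes:,} bytes")
print(f"category: {category_bytes:,} bytes")
```

A categorical stores each label once plus a small integer code per row, which is why it pays off mainly for columns with few distinct values.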

Common Pitfalls and How to Avoid Them

When using pandas groupby first, there are some common pitfalls that you should be aware of:

- Mixing values from different rows: first takes the first non-null value of each column independently, so when some entries are missing, a result row can combine values from different original rows.

- Ignoring the index: After applying groupby first, the grouping column(s) become the index of the result, which can lead to confusion if you expect them as regular columns.

- Forgetting to reset the index: In some cases, you may need to call reset_index() (or pass as_index=False to groupby) after applying groupby first to maintain the desired structure of your data.

Here’s an example illustrating these pitfalls and how to avoid them:

```python
import pandas as pd

# Create a sample DataFrame with mixed data types
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C'],
    'numeric': [1, 2, 3, 4, 5],
    'text': ['apple', 'banana', 'cherry', 'date', 'elderberry']
})

# Apply pandas groupby first
result = df.groupby('category').first()

# Reset index to avoid confusion
result_reset = result.reset_index()

print("Sample DataFrame:")
print(df)
print("\nResult of pandas groupby first:")
print(result)
print("\nResult with reset index:")
print(result_reset)
```

In this example, first handles the numeric and text columns alike, and reset_index() turns the ‘category’ index back into a regular column so the result keeps the same flat structure as the input.
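Passing as_index=False to groupby produces the flat layout in one step instead of calling reset_index() afterwards. This sketch checks on the same data that both routes give the same values.

```python
import pandas as pd

df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C'],
    'numeric': [1, 2, 3, 4, 5],
    'text': ['apple', 'banana', 'cherry', 'date', 'elderberry'],
})

# Two routes to a result with 'category' as a regular column
via_reset = df.groupby('category').first().reset_index()
via_as_index = df.groupby('category', as_index=False).first()

print(via_as_index)
```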

Advanced Features of Pandas GroupBy First

Let’s explore some advanced features of pandas groupby first that can enhance your data analysis capabilities:

1. Using Custom Aggregation Functions

While the built-in ‘first’ function is useful, you can also create custom aggregation functions to use with groupby operations.

Here’s an example of using a custom aggregation function with pandas groupby:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C'],
    'value1': [10, 20, 30, 40, 50],
    'value2': [1, 2, 3, 4, 5]
})

# Define a custom aggregation function: the first value of each group.
# Unlike the built-in 'first', it does not skip NaN values.
def custom_first(x):
    return x.iloc[0] if len(x) > 0 else pd.NA

# Apply custom aggregation function with groupby
result = df.groupby('category').agg({
    'value1': custom_first,
    'value2': 'first'  # Using built-in 'first' for comparison
})

print("Sample DataFrame:")
print(df)
print("\nResult of groupby with custom aggregation function:")
print(result)
```

This example demonstrates how to create and use a custom aggregation function alongside the built-in ‘first’ function in a pandas groupby operation.
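The custom function and the built-in ‘first’ agree on the data above because it has no missing values. The sketch below uses hypothetical data with a leading NaN to show where they diverge; literal_first is an illustrative name, and agg uses the function’s name as the output column label.

```python
import numpy as np
import pandas as pd

# Hypothetical data: group A starts with a missing value
df = pd.DataFrame({
    'category': ['A', 'A', 'B'],
    'value': [np.nan, 2.0, 3.0],
})

def literal_first(x):
    # Position 0 of the group, whatever it holds (NaN included)
    return x.iloc[0]

result = df.groupby('category')['value'].agg(['first', literal_first])
print(result)
```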

2. Handling Time-based Data

When working with time-based data, pandas groupby first can be particularly useful for extracting the first occurrence within specific time periods.

Here’s an example of using pandas groupby first with time-based data:

```python
import pandas as pd

# Create a sample DataFrame with time-based data
# ('h' is the hourly alias; pandas 2.2 deprecates the uppercase 'H')
df = pd.DataFrame({
    'timestamp': pd.date_range(start='2023-01-01', periods=10, freq='h'),
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C', 'A', 'B'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
})

# Extract the first occurrence for each category per day.
# The day key is renamed to 'day' so that reset_index() does not
# collide with the 'timestamp' column still present in the result.
day = df['timestamp'].dt.date.rename('day')
result = df.groupby([day, 'category']).first().reset_index()

print("Time-based DataFrame:")
print(df)
print("\nResult of pandas groupby first with time-based data:")
print(result)
```

This example shows how to use pandas groupby first to extract the first occurrence of each category for each day in a time-based dataset.
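Grouping by dt.date produces Python date objects. If you would rather keep a proper datetime index, dt.floor('D') truncates each timestamp to midnight instead. This sketch uses hypothetical timestamps spread over two days; the key name ‘day’ is an arbitrary choice.

```python
import pandas as pd

# Hypothetical data spanning two days
df = pd.DataFrame({
    'timestamp': pd.to_datetime(['2023-01-01 08:00', '2023-01-01 17:30',
                                 '2023-01-02 09:15', '2023-01-02 11:45']),
    'category': ['A', 'A', 'A', 'B'],
    'value': [10, 20, 30, 40],
})

# Truncate to midnight; the key stays datetime64 instead of Python dates
day = df['timestamp'].dt.floor('D').rename('day')
result = df.groupby([day, 'category']).first()
print(result)
```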

3. Combining GroupBy First with Window Functions

Pandas groupby first can be combined with window functions to perform more complex analyses on your data.

Here’s an example of combining pandas groupby first with a window function:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80]
})

# Combine groupby first with a window function
df['first_value'] = df.groupby('category')['value'].transform('first')
df['running_max'] = df.groupby('category')['value'].cummax()

print("Sample DataFrame:")
print(df)
```

This example demonstrates how to combine pandas groupby first with a window function (cummax) to calculate both the first value and the running maximum for each category.
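A related per-row tool is cumcount, which numbers the rows within each group starting at 0. Testing for position 0 flags the first row of every group while keeping all rows in place. This sketch uses the same data; the column names ‘position’ and ‘is_first’ are illustrative.

```python
import pandas as pd

df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80],
})

# 0, 1, 2, ... within each category, in row order
df['position'] = df.groupby('category').cumcount()
df['is_first'] = df['position'] == 0

first_rows = df[df['is_first']]
print(df)
print(first_rows)
```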

Comparing Pandas GroupBy First with Other Methods

While pandas groupby first is a powerful tool, it’s important to understand how it compares to other methods for similar tasks. Let’s compare it with some alternatives:

1. Pandas GroupBy First vs. Drop Duplicates

Both pandas groupby first and drop_duplicates can be used for deduplication, but they have different use cases and performance characteristics.

Here’s a comparison:

```python
import pandas as pd

# Create a sample DataFrame with duplicates
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80]
})

# Using pandas groupby first
result_groupby = df.groupby('category').first().reset_index()

# Using drop_duplicates
result_dropdup = df.drop_duplicates(subset='category')

print("Sample DataFrame:")
print(df)
print("\nResult using pandas groupby first:")
print(result_groupby)
print("\nResult using drop_duplicates:")
print(result_dropdup)
```

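This example compares the results of using pandas groupby first and drop_duplicates for deduplication. Here both return one row per category with the same values, but they differ in the details: groupby first builds a new index from the sorted group keys, while drop_duplicates keeps the original rows with their original index labels and order. drop_duplicates never fills in missing values from later rows, whereas groupby first does, and groupby first extends naturally to other aggregations.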

2. Pandas GroupBy First vs. Indexing

In some cases, you might be tempted to use sorting and indexing to achieve similar results to pandas groupby first. That works, but it takes more code than a single groupby call.

Here’s a comparison:

```python
import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80]
})

# Using pandas groupby first
result_groupby = df.groupby('category').first().reset_index()

# Using indexing: a stable sort keeps rows with equal keys in their
# original order, so the kept row really is the first occurrence
df_sorted = df.sort_values('category', kind='stable')
result_indexing = df_sorted.loc[df_sorted['category'].drop_duplicates().index]

print("Sample DataFrame:")
print(df)
print("\nResult using pandas groupby first:")
print(result_groupby)
print("\nResult using indexing:")
print(result_indexing)
```

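This example compares pandas groupby first with a sort-and-index approach. Both produce the same rows here, but the indexing approach only returns the original first occurrence of each category if the sort is stable (sort_values(..., kind='stable')), because the default quicksort does not guarantee the order of rows with equal keys. groupby first avoids that subtlety and is usually the more readable choice.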
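If the goal is simply the first actual row per category with its original index, groupby(...).head(1) does it without any sorting. This sketch runs it on the same data.

```python
import pandas as pd

df = pd.DataFrame({
    'category': ['A', 'B', 'A', 'B', 'C', 'A', 'B', 'C'],
    'value': [10, 20, 30, 40, 50, 60, 70, 80],
})

# First row of each group, original row order and labels preserved
result_head = df.groupby('category').head(1)
print(result_head)
```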

Optimizing Pandas GroupBy First for Large Datasets

When working with large datasets, optimizing your pandas groupby first operations becomes crucial. Here are some techniques to improve performance:

1. Using Categorical Data Types

Converting low-cardinality string columns to categorical data types reduces memory use and can speed up groupby operations, including groupby first.

Here’s an example:

```python
import pandas as pd
import numpy as np

# Create a large sample DataFrame
n = 1000000
df = pd.DataFrame({
    'category': np.random.choice(['A', 'B', 'C', 'D', 'E'], n),
    'value': np.random.randn(n)
})

# Convert 'category' to categorical
df['category'] = pd.Categorical(df['category'])

# Apply pandas groupby first; observed=True returns only categories that
# occur and avoids the FutureWarning recent pandas emits for categorical keys
result = df.groupby('category', observed=True).first()

print("Large DataFrame sample:")
print(df.head())
print("\nResult of pandas groupby first with categorical data:")
print(result)
```

This example demonstrates how to use categorical data types to optimize pandas groupby first operations on large datasets.
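The observed argument matters whenever a categorical defines categories that never occur in the data. This sketch uses a hypothetical column whose category ‘C’ is declared but unused: with observed=False, C still gets a result row, filled with NaN.

```python
import pandas as pd

# Hypothetical data: category 'C' is declared but has no rows
df = pd.DataFrame({
    'category': pd.Categorical(['A', 'B', 'A'], categories=['A', 'B', 'C']),
    'value': [1.0, 2.0, 3.0],
})

# Every declared category gets a row; empty ones are NaN
all_categories = df.groupby('category', observed=False).first()

# Only categories that actually appear
observed_only = df.groupby('category', observed=True).first()

print(all_categories)
print(observed_only)
```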

2. Chunking Large Datasets

For extremely large datasets that don’t fit in memory, you can use chunking to process the data in smaller pieces.

Here’s an example of using chunking with pandas groupby first:

```python
import pandas as pd

# Function to process chunks
def process_chunk(chunk):
    return chunk.groupby('category').first()

# Read and process data in chunks ('large_dataset.csv' is a placeholder path)
chunk_size = 100000
chunk_results = []
for chunk in pd.read_csv('large_dataset.csv', chunksize=chunk_size):
    chunk_results.append(process_chunk(chunk))

# Concatenate once (cheaper than concatenating inside the loop), then take
# the first non-null value per category across chunks; chunk order is kept,
# so this matches running first() on the whole file
result = pd.concat(chunk_results)
result = result.groupby(level=0).first()

print("Result of pandas groupby first with chunking:")
print(result.head())
```

This example shows how to use chunking to process a large dataset that doesn’t fit in memory, applying pandas groupby first to each chunk and then combining the results.
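Because first keeps the earliest non-null value and the chunk results are combined in file order, the two-stage approach should give the same answer as a single pass. This sketch checks that on a tiny in-memory CSV (hypothetical data, read through io.StringIO instead of a file), including a chunk where category B is entirely missing.

```python
import io

import pandas as pd

csv_text = """category,value
A,1
B,
A,3
C,4
B,5
A,6
C,7
"""

# Two-stage: first() per chunk, then first() across the chunk results
chunk_results = []
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=3):
    chunk_results.append(chunk.groupby('category').first())
chunked_first = pd.concat(chunk_results).groupby(level=0).first()

# Single pass over all rows for comparison
direct_first = pd.read_csv(io.StringIO(csv_text)).groupby('category').first()

print(chunked_first)
```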

Conclusion

Pandas groupby first is a powerful and versatile tool for data analysis and manipulation. Throughout this comprehensive guide, we’ve explored its fundamental concepts, advanced techniques, practical applications, and optimization strategies. By mastering pandas groupby first, you’ll be able to efficiently summarize and analyze complex datasets, extract valuable insights, and streamline your data processing workflows.
